How Convex Works

Introduction

Over the past years, Convex has grown into a flourishing backend platform. We designed Convex to let builders just build and not have to worry about irrelevant details about administering backend infrastructure. Yet, curious developers have been asking us: How does Convex actually work? With our recent open source release, now is a perfect time to answer this question. Let’s jump in.

In this article, we’ll go on a tour of Convex, starting with an overview of the system. Then, we’ll focus on the system’s core state “at rest,” exploring what a Convex deployment looks like when it’s idle. We’ll then gradually introduce motion, seeing how live requests flow through the system. By the time we’re done, we’ll know the major pieces of Convex’s infrastructure, how they fit together, and the design principles underlying its construction.

Overview

Let’s get started with Convex by deploying James’s swaghaus app from his talk “The future of databases is not just a database.” This small demo app has a store where users can add items to their shopping cart, and items have a limited inventory. Users can’t add out-of-stock items to their shopping cart.

Deploying

Let’s start by cloning the repository with git clone and deploying to Convex and our hosting platform [1].

Our codebase has two halves: the Web app starting from index.html that we build with vite build and deploy to our hosting provider and the backend endpoints within convex/ that we push with convex deploy to the Convex cloud.

At its heart, a Convex deployment is a database that runs in the Convex cloud. But, it’s a new type of database that directly runs your application code in the convex/ folder as transactions, coupled with an end-to-end type system and consistency guarantees via its sync protocol.

Put another way, the most important thing to understand about Convex is that it’s a database running in the cloud that runs client-defined API functions as transactions directly within the database.

Serving

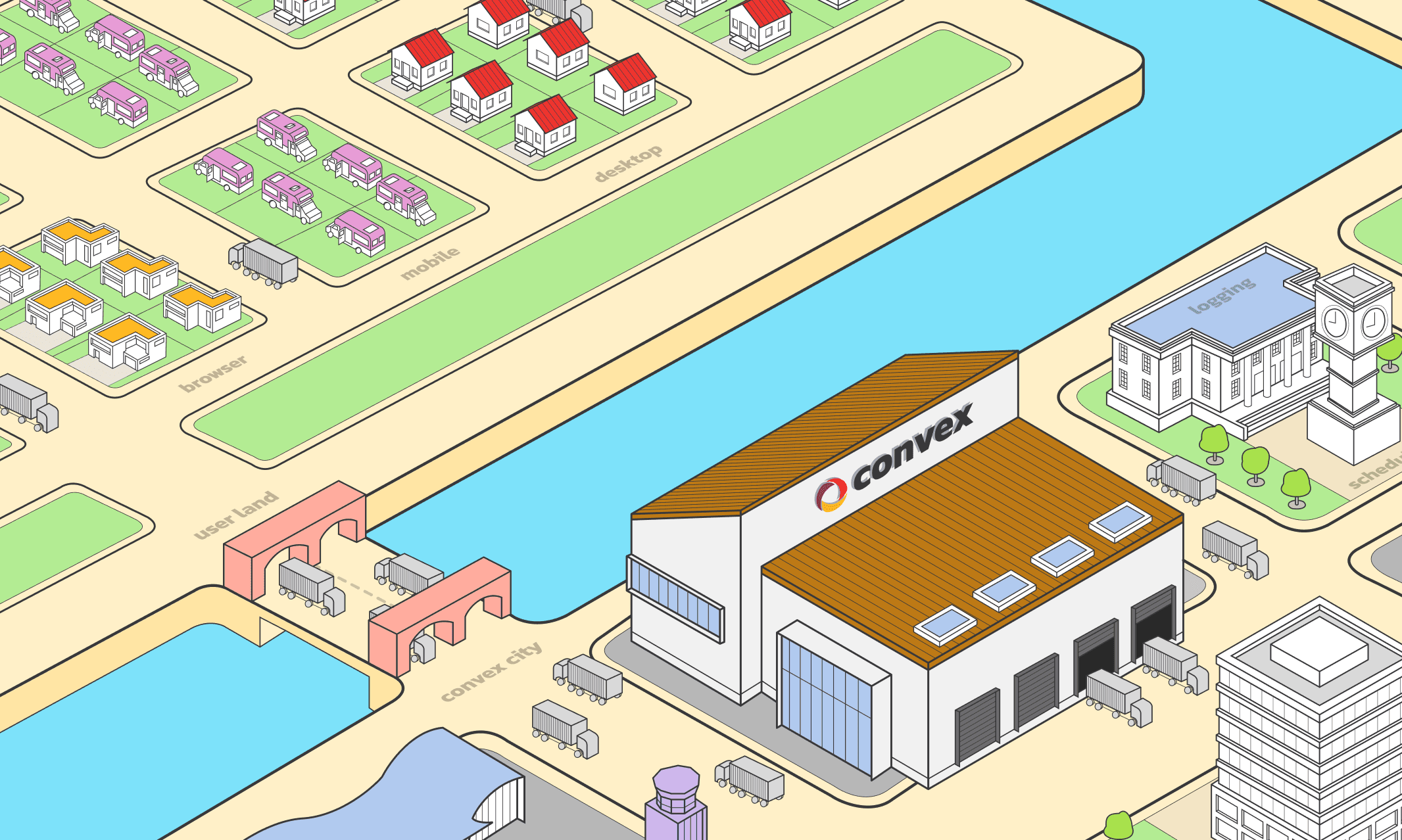

Now that we’ve deployed our app, let’s serve some traffic! Here’s the high-level architecture diagram.

Visiting our app at https://swaghaus.netlify.app creates a WebSocket connection to our Convex deployment for executing functions on the server and receiving their responses. Let’s open up that opaque Convex deployment box and see what’s inside.

There are three main pieces of a Convex deployment: the sync worker, which manages WebSocket sessions from clients, the function runner, which runs the functions in our convex/ folder, and the database, which stores our app’s state.

Convex at rest

We’ll start with the Convex deployment quietly at rest, right after deploying our app but before we’ve served any traffic. Let’s take a closer look at our convex/ folder. There are two primary pieces: functions and schema.

Functions

All public traffic to a Convex app’s backend must flow through public functions registered with query, mutation, and action from convex/server. In Swaghaus, we have a query function getItems that lists all items in the store that still have some stock remaining:

    // convex/getItems.ts
    import { query } from "./_generated/server";

    export default query({
      args: {},
      handler: async ({ db }) => {
        const items = await db
          .query("items")
          .withIndex("remaining", (q) => q.gt("remaining", 0))
          .collect();
        return items;
      },
    });

Then, whenever the user adds an item to their cart, they call the addCart mutation. We’ve left out a few parts of this function for brevity, but you can always see the full source on GitHub.

    // convex/addCart.ts
    import { v } from "convex/values";
    import { mutation } from "./_generated/server";

    // Moves item to the given shopping cart and decrements quantity
    // in stock.
    export default mutation({
      args: { itemId: v.id("items") },
      handler: async ({ db }, args) => {
        // Check the item exists and has sufficient stock.
        const item = await db.get("items", args.itemId);
        if (item.remaining <= 0) {
          throw new Error(`Insufficient stock of ${item.name}`);
        }

        // Increment the item's count in cart.
        // (`userToken` is defined in code omitted here.)
        const cartItem = await db
          .query("carts")
          .withIndex("user_item", (q) =>
            q.eq("userToken", userToken).eq("itemId", args.itemId)
          )
          .first();

        // Note: We're leaving out the code to insert the document
        // if it isn't there already.
        await db.patch("carts", cartItem._id, { count: cartItem.count + 1 });

        // Deduct stock for item.
        await db.patch("items", args.itemId, { remaining: item.remaining - 1 });
      },
    });

Our function runner uses V8 [2] for executing JavaScript, and since V8 can’t run TypeScript directly, we bundle (or compile) the code in your convex/ directory before sending it to the server for execution [3]. This process also creates smaller code units that execute faster as well as source maps that help us provide high-quality error backtraces.

Tables and schema

Apps can optionally specify their tables, and validators for the data within them, in a schema.ts file. Here’s the one for Swaghaus:

    // convex/schema.ts
    import { defineSchema, defineTable } from "convex/server";
    import { v } from "convex/values";

    export default defineSchema({
      items: defineTable({
        name: v.string(),
        description: v.string(),
        price: v.float64(),
        remaining: v.float64(),
        image: v.string(),
      }).index("remaining", ["remaining"]),
      carts: defineTable({
        userToken: v.string(),
        itemId: v.id("items"),
        count: v.float64(),
      }).index("user_item", ["userToken", "itemId"]),
    });

This schema defines two tables, items and carts, along with expected fields and field types for each one. It also defines indexes on items and carts; more on indexes later.

Tables contain documents, which can be arbitrary Convex objects. Convex supports a slight extension of JSON that adds 64-bit signed integers and binary data [4]. All documents have a unique document ID that’s generated by the system and stored on the _id field [5]. So, our items table might contain a document that looks like…

    {
      _id: "j970pq0asyav77fekdj08grwan6npmh1",
      description: "Keeps you shady",
      image: "hat.png",
      name: "Convex Hat",
      price: 19.5,
      remaining: 11,
    }

-

Aside: Day 1 Ease...

Convex doesn’t require developers to declare a schema upfront, since it’s really annoying to get started building an app and need to have everything figured out!

We designed Convex to be a schemaless document database because of the incredible success of MongoDB and Firebase. MongoDB was fantastically popular when it first came out in 2009, and for good reason! Despite having glaring implementation issues, it gave developers the experience they wanted on Day 1 of writing their app. Inserting and querying JSON objects directly in the database is refreshing after hours of fiddling with schema definitions, pondering how to normalize a data model, or tinkering with an ORM.

-

...but also Year 2 Power.

However, in our own experience and from talking to other startup founders, we found that they often had to migrate their systems off a Day 1 database once they found some success. Whether it was systems issues like performance or data loss, missing features like migrations, or the difficulty of understanding a structureless database in a large project, these teams often had to “graduate” to a more “serious” database in a painful app rewrite.

Why should developers have to choose between Day 1 ease and Year 2 power? In programming languages, TypeScript has brought incremental typing to the masses, maintaining good ergonomics for early stage prototypes while allowing smooth adoption of rigor. Each step of rigor brings practical benefits, like better autocomplete, documentation, and catching common bugs.

Putting all of this together, that’s why we designed Convex’s database to be amenable to loose schemaless models, schema-driven relational models, and everything in between. Taking a page from game designers, we want our system to have a smooth, gradually increasing difficulty curve without any abrupt jumps.

The transaction log

So far we’ve just discussed user facing parts of our system. Let’s dive into our first implementation detail to see how Convex actually stores data internally.

The Convex database stores its tables in the transaction log, an append-only data structure that stores all versions of documents within the database. Every version of a document contains a monotonically increasing timestamp within the log that’s like a version number [6]. The timestamp is purely an internal detail of the log, and it isn’t included in the document’s object [7].

So, our database state in Swaghaus might have a transaction log that looks like…

The transaction log contains all tables’ documents mixed together in timestamp order, where all tables share the same sequence of timestamps. Each timestamp t defines a snapshot of the database that includes all revisions up to t. In our example above, we have two snapshots of the database, each corresponding to a timestamp in the log.

Let’s say we’ve added a Convex Hat to our cart, decrementing its remaining count in items and incrementing its count in carts. Applying these updates appends two new entries to the end of the log.

With these two new entries, the snapshot of the database at time 15 has two hats in our cart and 10 hats remaining in inventory. Since these two entries have the same timestamp, their changes are applied atomically: the database state jumps from the snapshot at 14 to the snapshot at 15 without revealing the intermediate state where we’ve decremented from items but not incremented in carts.

To summarize, each modification of the database, whether it’s inserting, updating, or deleting a document, pushes an entry onto the transaction log. Pushing multiple entries at the same timestamp allows us to batch up multiple changes to the database into a single atomic unit. The log contains deltas to the database state, and applying all of the deltas up to a timestamp creates the snapshot of the database at that timestamp.
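To make the log-and-snapshot idea concrete, here is a minimal in-memory sketch in TypeScript. The `TransactionLog` class, the `LogEntry` shape, the short document IDs, and the timestamps 13 and 14 for the earlier entries are illustrative assumptions, not Convex's actual internals; only the two-entry commit at timestamp 15 mirrors the hat example.

```typescript
// Hypothetical types for illustration; Convex's real log format is not shown in this article.
type Doc = Record<string, unknown>;

interface LogEntry {
  ts: number; // timestamp shared by every entry written in the same atomic batch
  id: string; // document ID
  value: Doc | null; // the new version of the document, or null for a delete
}

class TransactionLog {
  private entries: LogEntry[] = [];

  // Append a batch of writes at one timestamp so they become visible atomically.
  append(ts: number, writes: { id: string; value: Doc | null }[]): void {
    const last = this.entries[this.entries.length - 1];
    if (last !== undefined && ts <= last.ts) {
      throw new Error("Timestamps must be strictly increasing across batches");
    }
    for (const w of writes) {
      this.entries.push({ ts, id: w.id, value: w.value });
    }
  }

  // Build the snapshot at `ts` by replaying every delta with timestamp <= ts.
  snapshotAt(ts: number): Record<string, Doc> {
    const snapshot: Record<string, Doc> = {};
    for (const e of this.entries) {
      if (e.ts > ts) break; // entries are stored in timestamp order
      if (e.value === null) delete snapshot[e.id];
      else snapshot[e.id] = e.value;
    }
    return snapshot;
  }
}

const txLog = new TransactionLog();
txLog.append(13, [{ id: "hat", value: { remaining: 11 } }]);
txLog.append(14, [{ id: "cart", value: { count: 1 } }]);
// Adding a hat to the cart writes both documents at the same timestamp.
txLog.append(15, [
  { id: "hat", value: { remaining: 10 } },
  { id: "cart", value: { count: 2 } },
]);

const snapshot14 = txLog.snapshotAt(14); // hat.remaining = 11, cart.count = 1
const snapshot15 = txLog.snapshotAt(15); // hat.remaining = 10, cart.count = 2
```

Because both entries at timestamp 15 are appended together, no call to `snapshotAt` can observe the decremented inventory without the incremented cart.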

Indexes

The transaction log is a minimalist data structure: It only supports appending some entries at the end and querying the entries at a given timestamp. However, this isn’t powerful enough to access our data efficiently from queries. For example, if we’re reading a snapshot of the database at timestamp 15, we don’t know which of the entries correspond to the latest state of each document. Without further data structures, we’d have to scan the whole log from scratch to build up this snapshot.

To make querying a snapshot efficient, we build indexes on top of the log. So, to look up documents by their _id field, we can maintain an index that maps each _id to its latest value.

Whenever we push a new entry onto the transaction log, we update the index to point to our latest revisions. The log is the immutable source of truth; the index is derived data that we modify over time to help us efficiently find documents’ latest versions.

As drawn above, our index only supports finding the latest revisions at a single version of the database at timestamp 15. It turns out that it’s useful to also support queries at multiple versions for timestamps in the recent past, making our index multiversioned.

In this example, the _id index supports queries at the latest timestamp 15 but also past timestamps 14 and 13 [8]. One useful mental model of this approach is that the index is a data structure that allows efficiently mapping a point in logical time to a consistent snapshot of the state of the world at that timestamp.
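As a rough illustration of a multiversioned index, the sketch below keeps a short list of revisions per _id and answers lookups at a chosen timestamp. The `MultiVersionIndex` class and its method names are hypothetical, and the timestamps are assumed for the example; this is not how Convex's index is actually implemented.

```typescript
// Hypothetical sketch of a multiversioned _id index; not Convex's implementation.
interface Version<T> {
  ts: number; // timestamp at which this revision was committed
  value: T | null; // null records that the document was deleted at `ts`
}

class MultiVersionIndex<T> {
  // For each _id, its revisions in increasing timestamp order.
  private revisions = new Map<string, Version<T>[]>();

  // Called whenever a new entry is pushed onto the transaction log.
  update(id: string, ts: number, value: T | null): void {
    const list = this.revisions.get(id) ?? [];
    list.push({ ts, value });
    this.revisions.set(id, list);
  }

  // Return the newest revision of `id` that is visible at snapshot `ts`.
  get(id: string, ts: number): T | null {
    const list = this.revisions.get(id) ?? [];
    for (let i = list.length - 1; i >= 0; i--) {
      const revision = list[i];
      if (revision !== undefined && revision.ts <= ts) return revision.value;
    }
    return null; // the document did not exist yet at `ts`
  }
}

const idIndex = new MultiVersionIndex<{ remaining: number }>();
idIndex.update("hat", 13, { remaining: 11 });
idIndex.update("hat", 15, { remaining: 10 });

const hatAt14 = idIndex.get("hat", 14); // { remaining: 11 }
const hatAt15 = idIndex.get("hat", 15); // { remaining: 10 }
const hatAt12 = idIndex.get("hat", 12); // null
```

A real index would also drop revisions older than the oldest timestamp it still needs to serve; that pruning is omitted here.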

Starting it up: The sync engine

We now have all the pieces to start letting some requests through our system! Let’s focus on the getItems query and addCart mutations we looked at earlier.

Concurrency and race conditions

For our Convex deployment to be Web Scale™, the backend needs to execute many getItems and addCart requests at the same time. Simply executing one function at a time is too slow for any real application [9]. Introducing concurrency, however, comes with its own problems. In many systems, the possibility of concurrent requests forces developers to handle race conditions where requests interact with each other in unexpected ways.

Let’s return to Swaghaus for an example of a race condition. Two users, Alice and Bob, are fighting over the last Convex Hat in our store and both call addCart at the same time.

Alice starts executing addCart and observes that there’s just one hat left. Great!

    // convex/addCart.ts
    import { v } from "convex/values";
    import { mutation } from "./_generated/server";

    // Moves item to the given shopping cart and decrements quantity in stock.
    export default mutation({
      args: { itemId: v.id("items") },
      handler: async ({ db }, args) => {
        // Check the item exists and has sufficient stock.
        const item = await db.get("items", args.itemId);
        if (item.remaining <= 0) {
          throw new Error(`Insufficient stock of ${item.name}`);
        }
        // <=== Alice observes `item.remaining === 1`.
        ...
      },
    });

Then, let’s say Bob’s request sneaks in and grabs the last item. Since Alice’s call to addCart hasn’t finished yet, Bob also observes that there’s one hat left, and he takes it.

    // convex/addCart.ts
    import { v } from "convex/values";
    import { mutation } from "./_generated/server";

    // Moves item to the given shopping cart and decrements quantity in stock.
    export default mutation({
      args: { itemId: v.id("items") },
      handler: async ({ db }, args) => {
        // Check the item exists and has sufficient stock.
        const item = await db.get("items", args.itemId);
        if (item.remaining <= 0) {
          throw new Error(`Insufficient stock of ${item.name}`);
        }
        // <=== Alice observed `item.remaining === 1`.

        // Increment the item's count in cart.
        const cartItem = await db
          .query("carts")
          .withIndex("user_item", (q) =>
            q.eq("userToken", userToken).eq("itemId", args.itemId)
          )
          .first();
        await db.patch("carts", cartItem._id, { count: cartItem.count + 1 });

        // Deduct stock for item.
        await db.patch("items", args.itemId, { remaining: item.remaining - 1 });

        // <=== Bob observed `item.remaining === 1`
        //      and sets `item.remaining` to `0`.
      },
    });

We return successfully to Bob’s web app, and he’s overjoyed to have the hat in his cart. But, Alice’s run of addCart continues, still believing that there’s a hat left.

    // convex/addCart.ts
    import { v } from "convex/values";
    import { mutation } from "./_generated/server";

    // Moves item to the given shopping cart and decrements quantity in stock.
    export default mutation({
      args: { itemId: v.id("items") },
      handler: async ({ db }, args) => {
        // Check the item exists and has sufficient stock.
        const item = await db.get("items", args.itemId);
        if (item.remaining <= 0) {
          throw new Error(`Insufficient stock of ${item.name}`);
        }
        // <=== Alice observed `item.remaining === 1`.

        // Increment the item's count in cart.
        const cartItem = await db
          .query("carts")
          .withIndex("user_item", (q) =>
            q.eq("userToken", userToken).eq("itemId", args.itemId)
          )
          .first();
        await db.patch("carts", cartItem._id, { count: cartItem.count + 1 });

        // Deduct stock for item.
        await db.patch("items", args.itemId, { remaining: item.remaining - 1 });

        // <=== Bob observed `item.remaining === 1`
        //      and set `item.remaining` to `0`.
        // <=== Alice observed `item.remaining === 1`
        //      and also set `item.remaining` to `0`.
      },
    });

In many databases, both Alice and Bob would get the hat in their cart, and item.remaining would be 0 in the database. Both of their orders would go through, and chaos would reign in Swaghaus’s warehouse as we tried to fill two orders with the last Convex Hat.

This anomaly is called a race condition since Alice and Bob’s requests are “racing” to complete their requests and tripping over each other’s writes. Race conditions often cause bugs in programs since it’s hard to reason about all the possible ways our code can interleave with itself. In our example, our mental model of addCart didn’t include the possibility of it pausing after performing the inventory check and letting someone else steal the hat we were looking at.
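The interleaving can be reproduced outside any database with a toy in-memory store. In this sketch the `Store` type and the `checkStock` and `writeCart` helpers are made up for illustration: they split a naive addCart into its read step and its write step so the two requests can be interleaved by hand.

```typescript
// A toy in-memory "database" with no isolation between requests (illustration only).
interface Store {
  remaining: number; // hats left in stock
  carts: Record<string, number>; // user -> hats in cart
}

// Step 1 of a naive addCart: the inventory check. Returns the stock it observed.
function checkStock(store: Store): number {
  if (store.remaining <= 0) throw new Error("Insufficient stock");
  return store.remaining;
}

// Step 2: write back using the value observed in step 1, which may be stale by now.
function writeCart(store: Store, user: string, observed: number): void {
  store.carts[user] = (store.carts[user] ?? 0) + 1;
  store.remaining = observed - 1;
}

// Interleaved execution: both requests pass the check before either one writes.
const racy: Store = { remaining: 1, carts: {} };
const aliceSaw = checkStock(racy); // 1
const bobSaw = checkStock(racy); // 1
writeCart(racy, "bob", bobSaw);
writeCart(racy, "alice", aliceSaw);
// racy is now { remaining: 0, carts: { bob: 1, alice: 1 } }: two hats promised, one in stock.

// Serial execution: Alice runs to completion, then Bob's check fails.
const serial: Store = { remaining: 1, carts: {} };
writeCart(serial, "alice", checkStock(serial));
let bobRejected = false;
try {
  writeCart(serial, "bob", checkStock(serial));
} catch {
  bobRejected = true;
}
```

The two runs execute exactly the same steps; only the order differs, which is what makes this class of bug hard to spot in review.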

Transactions

We’re stuck between two competing goals: We want to run many functions concurrently, but we don’t want to have to reason about how concurrent function calls interact with each other. In an ideal world, we’d be able to reason about our functions as if they executed one at a time but still have the runtime performance of concurrent execution.

Transactions are the missing piece that let us have our cake and eat it too. A transaction is an atomic group of reads and writes to the database that encapsulates some application logic. Transactions extend the idea of an atomic batch of writes to our transaction log to include both reads and writes. In Convex all queries and mutations interact with the database exclusively through transactions: all of the reads and writes in a function’s execution are grouped together into an atomic transaction.

Convex ensures that all transactions in the system are serializable, which means that their behavior is exactly the same as if they executed one at a time. Therefore, developers don’t have to worry about race conditions when writing apps on Convex.

In our previous example, our inventory count bug fundamentally relied on two calls to addCart being interleaved in time, and this bug is impossible in a serializable system. So, in Convex, Alice’s transaction behaves as if it first executes to completion:

    // convex/addCart.ts
    import { v } from "convex/values";
    import { mutation } from "./_generated/server";

    // Moves item to the given shopping cart and decrements quantity in stock.
    export default mutation({
      args: { itemId: v.id("items") },
      handler: async ({ db }, args) => {
        // Check the item exists and has sufficient stock.
        const item = await db.get("items", args.itemId);
        if (item.remaining <= 0) {
          throw new Error(`Insufficient stock of ${item.name}`);
        }
        // <=== Alice observes `item.remaining === 1`.

        // Increment the item's count in cart.
        const cartItem = await db
          .query("carts")
          .withIndex("user_item", (q) =>
            q.eq("userToken", userToken).eq("itemId", args.itemId)
          )
          .first();
        await db.patch("carts", cartItem._id, { count: cartItem.count + 1 });

        // Deduct stock for item.
        await db.patch("items", args.itemId, { remaining: item.remaining - 1 });

        // <=== Alice sets `item.remaining` to `0`.
      },
    });

Then, Bob’s request executes to completion, failing with an insufficient stock error.

    // convex/addCart.ts
    import { v } from "convex/values";
    import { mutation } from "./_generated/server";

    // Moves item to the given shopping cart and decrements quantity in stock.
    export default mutation({
      args: { itemId: v.id("items") },
      handler: async ({ db }, args) => {
        // Check the item exists and has sufficient stock.
        const item = await db.get("items", args.itemId);
        if (item.remaining <= 0) {
          // <=== Bob observes `item.remaining === 0`.
          throw new Error(`Insufficient stock of ${item.name}`);
        }
        ...
      },
    });

Bob is disappointed, but our application remains correct and doesn’t make promises it can’t keep. And, importantly, we have a great developer experience where we can fearlessly write concurrent code on Convex.

-

Aside: Why serializability?

We believe that any isolation level less than serializable is just too hard a programming model for developers. It’s well known that writing correct multithreaded code is impossibly difficult, and reasoning about concurrency doesn’t get any easier at a database’s scale. Non-serializable transactions are an extraordinarily complex abstraction that provides too little to the developer.

However, most database deployments are less than serializable! Postgres defaults to READ COMMITTED, and MySQL defaults to REPEATABLE READ; these lower isolation levels expose many concurrency-based anomalies to developers. In our experience, many developers think they’re getting more than they actually are from their database, and their applications have subtle latent bugs that only show up at scale.

Let’s now dive into how Convex implements serializability and gets the best of both worlds: Transactions can run in parallel and commit independently when they don’t conflict with each other, and the system can process many requests per second. We provide the abstraction of one transaction happening at a time while also providing the throughput of a concurrent database.

Read and write sets

Convex implements serializability using optimistic concurrency control. Optimistic concurrency control algorithms don’t grab locks on rows in the database. Instead, they assume that conflicts between transactions are rare, record what each transaction reads and writes, and check for conflicts at the end of a transaction’s execution [10].

Transactions have three main ingredients: a begin timestamp, their read set, and their write set. Let’s return to Alice’s call to addCart to see how this works.

    // convex/addCart.ts
    import { v } from "convex/values";
    import { mutation } from "./_generated/server";

    // Moves item to the given shopping cart and decrements quantity in stock.
    export default mutation({
      args: { itemId: v.id("items") },
      handler: async ({ db }, args) => {
        // <=== Alice starts a transaction at timestamp 16.

        // Check the item exists and has sufficient stock.
        const item = await db.get("items", args.itemId);
        ...
      },
    });

The first step to executing a transaction is picking its begin timestamp (16 in our example). This timestamp chooses a snapshot of the database for all reads during the transaction’s execution. It never changes during execution, even if there are concurrent writes to the database.

Let’s continue execution until we hit db.get("items", args.itemId), which looks up itemId in the ID index. After querying the index, we record the index range we scanned in the transaction’s read set. The read set precisely records all of the data that a transaction queried.

    // convex/addCart.ts
    import { v } from "convex/values";
    import { mutation } from "./_generated/server";

    // Moves item to the given shopping cart and decrements quantity in stock.
    export default mutation({
      args: { itemId: v.id("items") },
      handler: async ({ db }, args) => {
        // Check the item exists and has sufficient stock.
        const item = await db.get("items", args.itemId);

        // <=== Alice queries `itemId` from `items`'s ID index at timestamp 16.
        //      Alice inserts `get(items, itemId)` into the read set.

        if (item.remaining <= 0) {
          throw new Error(`Insufficient stock of ${item.name}`);
        }

        // Increment the item's count in cart.
        const cartItem = await db
          .query("carts")
          .withIndex("user_item", (q) =>
            q.eq("userToken", userToken).eq("itemId", args.itemId)
          )
          .first();
        ...
      },
    });

As we proceed through addCart, we query carts as well and record the index range q.eq("userToken", userToken).eq("itemId", itemId) in the read set too. Let’s continue to the db.patch call, where we first update the database.

    // convex/addCart.ts
    import { v } from "convex/values";
    import { mutation } from "./_generated/server";

    // Moves item to the given shopping cart and decrements quantity in stock.
    export default mutation({
      args: { itemId: v.id("items") },
      handler: async ({ db }, args) => {
        // Check the item exists and has sufficient stock.
        const item = await db.get("items", args.itemId);
        if (item.remaining <= 0) {
          throw new Error(`Insufficient stock of ${item.name}`);
        }
        // Increment the item's count in cart.
        const cartItem = await db
          .query("carts")
          .withIndex("user_item", (q) =>
            q.eq("userToken", userToken).eq("itemId", args.itemId)
          )
          .first();
        await db.patch("carts", cartItem._id, { count: cartItem.count + 1 });
        // <=== Alice increments `cartItem.count`.

        // Deduct stock for item.
        await db.patch("items", args.itemId, { remaining: item.remaining - 1 });
      },
    });

Updates to the database don’t actually write to the transaction log or indexes immediately. Instead, the transaction accumulates them in its write set, which contains a map of each ID to the new value proposed by the transaction. In our example, the two calls to db.patch insert new versions of the cartItem._id and itemId documents into the write set.

Let’s summarize the state of our transaction once we’re done executing addCart:

- Begin timestamp: 16
- Read set: { get("items", itemId), query("carts.user_item", eq("userToken", userToken), eq("itemId", itemId)) }
- Write set: { cartItem._id: { ...cartItem, count: cartItem.count + 1 }, itemId: { ...item, remaining: item.remaining - 1 } }
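The bookkeeping behind these three ingredients can be sketched in a few lines of TypeScript. The `OptimisticTransaction` class below is hypothetical and simplified: it records reads per document ID instead of per index range, and it assumes a transaction sees its own buffered writes, which the text above does not spell out.

```typescript
// Hypothetical sketch: reads are tracked per document ID rather than per index range.
type Doc = Record<string, unknown>;

class OptimisticTransaction {
  readonly beginTs: number;
  readonly readSet: string[] = []; // IDs of every document this transaction looked up
  readonly writeSet = new Map<string, Doc>(); // ID -> proposed new version
  private snapshot: Record<string, Doc>;

  constructor(beginTs: number, snapshot: Record<string, Doc>) {
    this.beginTs = beginTs;
    this.snapshot = snapshot; // database state as of `beginTs`; never mutated here
  }

  // Read from the snapshot and remember what was read.
  get(id: string): Doc | null {
    this.readSet.push(id);
    // Assumption: a transaction sees its own buffered writes before the snapshot.
    return this.writeSet.get(id) ?? this.snapshot[id] ?? null;
  }

  // Buffer an update in the write set instead of touching the database.
  patch(id: string, fields: Doc): void {
    const current = this.writeSet.get(id) ?? this.snapshot[id];
    if (current === undefined) throw new Error(`Document ${id} not found`);
    this.writeSet.set(id, { ...current, ...fields });
  }
}

const snapshotAt16: Record<string, Doc> = {
  hat: { remaining: 1 },
  cart: { count: 1 },
};
const aliceTx = new OptimisticTransaction(16, snapshotAt16);
const hatDoc = aliceTx.get("hat");
const cartDoc = aliceTx.get("cart");
if (hatDoc !== null && cartDoc !== null) {
  aliceTx.patch("cart", { count: Number(cartDoc["count"]) + 1 });
  aliceTx.patch("hat", { remaining: Number(hatDoc["remaining"]) - 1 });
}
// aliceTx.readSet  -> ["hat", "cart"]
// aliceTx.writeSet -> hat: { remaining: 0 }, cart: { count: 2 }
// snapshotAt16 is unchanged: nothing is written until the transaction commits.
```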

Commit protocol

The committer in our system is the sole writer to the transaction log, and it receives finalized transactions, decides if they’re safe to commit, and then appends their write sets to the transaction log.

Let’s process Alice’s finalized transaction. The committer starts by first assigning a commit timestamp to the transaction that’s larger than all previously committed transactions. Let’s say in our example that a few transactions have committed concurrently with Alice’s call to addCart at timestamps 17 and 18, so our commit timestamp will be 19.

We can check whether it’s serializable to commit our transaction at timestamp 19 by answering the question, “Would our transaction have the exact same outcome if it executed at timestamp 19 instead of timestamp 16?” [11]. One way to answer this question would be to rerun addCart from scratch at timestamp 19, but then we’d lose all concurrency in our system, returning to running just one transaction at a time.

Instead, we can check whether any of the writes between the begin timestamp and commit timestamp overlap with our transaction’s read set. For each log entry in this range, we see if the write would have changed the result of either our get("items", itemId) or query("carts.user_item", ...) reads.

If none of these writes overlap, then the database will look exactly the same whether addCart executed at timestamp 16 or the present timestamp 19. So, we can just pretend the addCart transaction actually happened at timestamp 19. This process of choosing a safe commit timestamp is typically referred to as “serializing” the transaction. What it means in practice is that multiple transactions are able to run safely simultaneously, and the final database state will look like they happened one at a time.

Assuming we didn’t find any overlapping writes, the committer pushes the transaction’s write set onto the transaction log and returns successfully to the client.

If, however, we found a concurrent write that overlapped with our transaction’s read set, we have to abort the transaction. We roll back its writes by discarding its write set, and the committer throws an “Optimistic Concurrency Control” (or “OCC”) conflict error to the function runner. This error signals that the transaction conflicted with a concurrent write and needs to be retried. The function runner will then retry addCart at a new begin timestamp past the conflicting write. We’ll see in a bit why it’s always safe for the function runner to retry this mutation.
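The validation step can be sketched as follows. This is a simplified model under stated assumptions, not Convex's committer: the `Committer` class and its types are hypothetical, read sets are reduced to document IDs instead of index ranges, and a conflict is reported as a tagged result rather than a thrown OCC error.

```typescript
// Hypothetical sketch; read sets are simplified to document IDs instead of index ranges.
type Doc = Record<string, unknown>;

interface LogEntry {
  ts: number;
  id: string;
  value: Doc;
}

interface FinalizedTx {
  beginTs: number;
  readSet: string[]; // IDs the transaction read
  writes: { id: string; value: Doc }[]; // the transaction's write set
}

type CommitResult =
  | { ok: true; commitTs: number }
  | { ok: false; conflictTs: number }; // stands in for the OCC conflict error

class Committer {
  private log: LogEntry[] = [];
  private lastTs = 0;

  commit(tx: FinalizedTx): CommitResult {
    // The commit timestamp is larger than every previously committed one.
    const commitTs = this.lastTs + 1;

    // Check each write made after the begin timestamp against the read set.
    for (const entry of this.log) {
      if (entry.ts > tx.beginTs && tx.readSet.indexOf(entry.id) !== -1) {
        return { ok: false, conflictTs: entry.ts }; // abort: the write set is discarded
      }
    }

    // No overlap: append the whole write set at a single timestamp.
    for (const w of tx.writes) {
      this.log.push({ ts: commitTs, id: w.id, value: w.value });
    }
    this.lastTs = commitTs;
    return { ok: true, commitTs };
  }
}

const committer = new Committer();
// Seed the database with one hat left (this commits at timestamp 1).
committer.commit({ beginTs: 0, readSet: [], writes: [{ id: "hat", value: { remaining: 1 } }] });

// Alice and Bob both begin at timestamp 1 and both read the hat.
const soldOut = { id: "hat", value: { remaining: 0 } };
const aliceResult = committer.commit({ beginTs: 1, readSet: ["hat"], writes: [soldOut] });
const bobResult = committer.commit({ beginTs: 1, readSet: ["hat"], writes: [soldOut] });
// aliceResult -> { ok: true, commitTs: 2 }
// bobResult   -> { ok: false, conflictTs: 2 }
```

On a conflict, the caller would rerun the function at a begin timestamp past `conflictTs` and submit the new transaction; in this example Bob's rerun would then see `remaining: 0`.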

Subscriptions

At this point, we’ve learned how Convex uses a custom-built database to provide strong consistency and high transaction processing throughput. But wait, there’s more! We can also use read sets for implementing realtime updates for queries, where a user can subscribe to the result of a query changing.

Let’s return to our query getItems, which finds all items with remaining inventory. We track read sets when executing queries just like we do in mutations.

    // convex/getItems.ts
    import { query } from "./_generated/server";

    export default query({
      args: {},
      handler: async ({ db }) => {
        const items = await db
          .query("items")
          .withIndex("remaining", (q) => q.gt("remaining", 0))
          .collect();
        // <=== Read set: `{ query(items.remaining, gt("remaining", 0)) }`
        return items;
      },
    });

Queries don’t go through the commit protocol, since they don’t have any writes, but we can use their read sets for implementing subscriptions. Let’s return to our React app to see how this works.

    // components/Items.tsx
    import { api } from "../convex/_generated/api";
    import { useQuery } from "convex/react";
    import { Item } from "./Item";

    export function Items() {
      const items = useQuery(api.getItems.default) ?? [];

      return (
        <div>
          {items.map((item) => (
            <Item item={item} key={item._id.toString()} />
          ))}
        </div>
      );
    }

Our client app lists the available items and renders an <Item/> for each one. After fetching the initial list of items, we’d like the component to live update any time the set of available items changes. In Convex, this is entirely automatic!

After running getItems and rendering its return value, we keep track of its read set in the client’s WebSocket session within the sync worker. Then, we can efficiently detect whether the query’s result would have changed using the exact same algorithm the committer uses for detecting serializability conflicts: Walk the log after the query’s begin timestamp and see if any entry overlaps.

If no entry overlaps, the subscription is still up-to-date, and we wait for new commit entries to show up. Otherwise, we know that the database rows read by the query have changed, and the sync worker reruns the function and pushes its updated return value to the client.

Implemented naively, this would imply that every query for every client would scan the transaction log independently, causing us to waste a lot of work rereading the transaction log many times over. Instead, we aggregate all client sessions into the subscription manager, which maintains all read sets for all active subscriptions. Then, it walks over the transaction log once and efficiently determines whether each entry overlaps with any active subscription’s read set.

Once the subscription manager finds an intersection, it pushes a message to the appropriate sync worker session, which then reruns the query. After rerunning the query, the sync worker updates the subscription manager with the query’s new read set.
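A rough sketch of this overlap check is shown below. The `SubscriptionManager` class, the `RangeRead` and `IndexWrite` shapes, and the subscription ID are hypothetical, and read sets are limited to "greater than" ranges over numeric index keys so that getItems's `remaining > 0` read can be expressed; the real data structures are certainly richer than this.

```typescript
// Hypothetical sketch: a read set is a list of "keys greater than X" ranges on named indexes.
interface RangeRead {
  index: string; // e.g. "items.remaining"
  gt: number; // the query read every key strictly greater than this bound
}

// One log entry's effect on one index: the key it removed and the key it added.
interface IndexWrite {
  ts: number;
  index: string;
  oldKey: number | null; // null if the document is newly inserted
  newKey: number | null; // null if the document was deleted
}

// A write overlaps a read if the document was in the range before or after the write.
function overlaps(read: RangeRead, write: IndexWrite): boolean {
  if (read.index !== write.index) return false;
  const inRange = (key: number | null): boolean => key !== null && key > read.gt;
  return inRange(write.oldKey) || inRange(write.newKey);
}

class SubscriptionManager {
  private readSets = new Map<string, RangeRead[]>();

  // Register (or refresh, after a rerun) the read set for a subscription.
  subscribe(subscriptionId: string, readSet: RangeRead[]): void {
    this.readSets.set(subscriptionId, readSet);
  }

  // Examine one log entry once and report every subscription it invalidates.
  invalidatedBy(write: IndexWrite): string[] {
    const stale: string[] = [];
    this.readSets.forEach((readSet, subscriptionId) => {
      if (readSet.some((read) => overlaps(read, write))) stale.push(subscriptionId);
    });
    return stale;
  }
}

const manager = new SubscriptionManager();
// getItems reads the range `remaining > 0`.
manager.subscribe("session1:getItems", [{ index: "items.remaining", gt: 0 }]);

// The last hat is sold: its key moves from 1 to 0, leaving the range.
const hatSold = manager.invalidatedBy({ ts: 19, index: "items.remaining", oldKey: 1, newKey: 0 });
// A new out-of-stock item is inserted: it never enters the range.
const emptyInsert = manager.invalidatedBy({ ts: 20, index: "items.remaining", oldKey: null, newKey: 0 });
// hatSold     -> ["session1:getItems"]
// emptyInsert -> []
```

Checking both the old and the new key matters: a document that leaves the range changes the query's result just as much as one that enters it.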

Functions: Sandboxing and determinism

We made a few implicit assumptions in the previous sections that we swept under the rug:

- When we failed Alice’s transaction with an Optimistic Concurrency Control error, we assumed that it was safe to roll back all of addCart's writes and retry it from scratch.
- We assumed that the only way getItems's return value would change is by one of its reads to the database changing.

These two assumptions form two important properties of our JavaScript runtime: sandboxing and determinism.

First, a mutation is only safe to roll back and retry if it has no external side effects outside of its database writes buffered in the write set. We enforce the absence of side effects by sandboxing all mutations’ JavaScript execution. This is why, for example, fetch isn’t available in mutations: if a fetch request sent an email to a user, it wouldn’t be safe to retry the mutation, which would then send the email twice.

Second, a query’s subscription is only precise if the query’s return value is fully determined by its arguments and database reads. Determinism requires sandboxing, since if we allowed queries to use fetch to access external data sources, we wouldn’t be able to know when those data sources changed. However, determinism also requires that our runtime is deterministic. For example, if a query function issues two db.get() calls concurrently, we need to ensure that Promise.race returns the same result every time the query is executed.

    export const usesRace = query({
      handler: async (ctx, args) => {
        const get1 = ctx.db.get("table", args.id1);
        const get2 = ctx.db.get("table", args.id2);

        // If we want `usesRace` to be deterministic, we need to return the
        // same result from `Promise.race` on each execution, even if the
        // underlying index reads within our database return in different
        // orders.
        const firstResult = await Promise.race([get1, get2]);
      },
    });

We implement both of these guarantees by directly using V8’s runtime and carefully controlling the environment we expose to executing JavaScript and scheduling IO operations. We’ll dive into more details for our JavaScript runtime in a future post.
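As an illustration of what controlling IO scheduling can mean, here is a minimal TypeScript sketch of one simple policy: start operations immediately, but deliver their results to JavaScript strictly in the order they were issued. The article does not say which policy Convex uses, so treat `DeterministicIo` and its `issue` method as hypothetical names for one possible approach.

```typescript
// Hypothetical sketch of one policy that makes `Promise.race` repeatable.
class DeterministicIo {
  // Resolves once every previously issued operation has been delivered.
  private tail: Promise<unknown> = Promise.resolve();

  issue<T>(operation: () => Promise<T>): Promise<T> {
    // The underlying IO starts right away and may finish in any order.
    const inFlight = operation();
    // Mark early failures as handled; they are still surfaced via `delivered`.
    inFlight.catch(() => undefined);
    // Deliver this result only after all earlier results were delivered.
    const delivered = this.tail.then(() => inFlight);
    this.tail = delivered.catch(() => undefined);
    return delivered;
  }
}
```

With this policy, racing two reads always yields the one that was issued first, no matter which read the database finishes first. The cost is that a fast read can wait behind a slow one, which is a trade-off a real runtime might resolve differently.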

We call this combination of the database, transactions, subscriptions, and deterministic JavaScript functions our sync engine. It’s the core of Convex and the most unique part of our system.

Putting it all together: Walking through a request

Okay, so now we have all the pieces in place to walk through a few requests from the client all the way to the Convex backend and back. Let’s say we load Swaghaus from our public URL, so our browser issues a GET request to https://swaghaus.netlify.app.

Executing a query

The web server serves our app’s JS, which creates the Convex client, executes our React components, and renders to the DOM.

    // src/main.tsx
    ...
    const convex = new ConvexReactClient(import.meta.env.VITE_CONVEX_URL);

    ReactDOM.createRoot(document.getElementById("root")!).render(
      ...
      <ConvexProvider client={convex}>
        ...
      </ConvexProvider>
    );

Creating the ConvexReactClient opens a WebSocket connection to our deployment, which is polite-quail-216.convex.cloud for my account.

Mounting our Items component registers the getItems query with the Convex client, which sends a message on the WebSocket to tell the sync worker to execute this query. The sync worker passes this request along to the function runner.

The function runner internally maintains an automatic cache of recently run functions, and if the query is already in the cache, it returns its cached value immediately[12]. Otherwise, it spins up an instance of the V8 runtime, begins a new transaction, and executes the function’s JavaScript.
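The cache lookup can be sketched as follows. This is a simplified TypeScript model under stated assumptions, not Convex’s implementation: `CachingRunner` and its types are hypothetical, read sets are half-open key ranges, and a cached result counts as valid at a timestamp if no logged write between the cached timestamp and the requested one overlaps its read set.

```typescript
// Hypothetical, simplified types; not Convex's real data structures.
type KeyRange = { table: string; start: string; end: string }; // [start, end)
type Write = { table: string; key: string };
type LogEntry = { timestamp: number; writes: Write[] };
type QueryOutput = { value: unknown; readSet: KeyRange[] };
type CachedResult = QueryOutput & { timestamp: number };

class CachingRunner {
  private cache = new Map<string, CachedResult>();
  private log: LogEntry[] = [];

  // Record a committed transaction so later lookups can validate against it.
  appendToLog(entry: LogEntry): void {
    this.log.push(entry);
  }

  // Serve from the cache when the cached read set is untouched; otherwise
  // execute the query and cache its output at the requested timestamp.
  run(name: string, args: unknown, timestamp: number, execute: () => QueryOutput): unknown {
    // JSON.stringify is a simplistic cache key (it is sensitive to key order).
    const key = `${name}:${JSON.stringify(args)}`;
    const cached = this.cache.get(key);
    if (cached && cached.timestamp <= timestamp && !this.isStale(cached, timestamp)) {
      return cached.value;
    }
    const output = execute();
    this.cache.set(key, { ...output, timestamp });
    return output.value;
  }

  // True if any write in (cached.timestamp, timestamp] hits the read set.
  private isStale(cached: CachedResult, timestamp: number): boolean {
    return this.log.some(
      (entry) =>
        entry.timestamp > cached.timestamp &&
        entry.timestamp <= timestamp &&
        entry.writes.some((w) =>
          cached.readSet.some((r) => r.table === w.table && r.start <= w.key && w.key < r.end),
        ),
    );
  }
}
```

Because validity is decided from the read set rather than from a time-to-live, a hit in this model is never stale: the cached value is what the query would return if it were rerun at the requested timestamp.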

Executing the function reads from various indexes in the database and builds up the query’s read set. After completely executing the query’s JavaScript, the function runner sends its return value, along with its begin timestamp and read set, back to the sync worker, which passes the result back to the client over the WebSocket. The sync worker also subscribes to the query’s read set with the subscription manager, requesting a notification whenever its result may change.

Executing a mutation

Clicking the “Add to Cart” button in Swaghaus triggers a mutation call to the server.

    // src/Item.tsx

    export function Item({ item }: { item: Doc<"items"> }) {
      const addCart = useMutation(api.addCart.default);
      ...
      return (
        <div className={styles.item}>
          ...
          <button
            className={styles.itemButton}
            onClick={() => addCart({ itemId: item._id })}
          >
            Add to Cart
          </button>
          ...
        </div>
      );
    }

Calling addCart sends a new message on the WebSocket to run the specified mutation. As with queries, the sync worker passes this message over to the function runner.

The function runner chooses a begin timestamp and executes the function, querying database indexes as needed. Unlike with queries, however, the function runner also sends back the mutation’s write set, along with its read set, begin timestamp, and return value. Then, the sync worker forwards the transaction to the committer, which decides whether it’s safe to commit.

If the serializability check passes, the committer appends the writes to the transaction log.

After successfully appending to the transaction log, the committer returns to the sync worker, which then passes the mutation’s return value to the client.
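The committer’s decision can be sketched in TypeScript as follows. This is a simplified model with hypothetical names (`Committer`, `Transaction`), not Convex’s implementation: it assumes read sets are half-open key ranges, timestamps are small integers, and a transaction is serializable if no entry committed after its begin timestamp wrote into its read set.

```typescript
// Hypothetical, simplified types; not Convex's real data structures.
type KeyRange = { table: string; start: string; end: string }; // [start, end)
type Write = { table: string; key: string };
type LogEntry = { timestamp: number; writes: Write[] };
type Transaction = { beginTimestamp: number; readSet: KeyRange[]; writes: Write[] };

class Committer {
  private log: LogEntry[] = [];
  private nextTimestamp = 1;

  // Returns the commit timestamp, or null on an OCC conflict.
  commit(tx: Transaction): number | null {
    // Did anything the transaction read change after it began?
    const conflict = this.log.some(
      (entry) =>
        entry.timestamp > tx.beginTimestamp &&
        entry.writes.some((w) =>
          tx.readSet.some((r) => r.table === w.table && r.start <= w.key && w.key < r.end),
        ),
    );
    if (conflict) return null;

    // Safe to commit: assign the next timestamp and append to the log.
    const commitTimestamp = this.nextTimestamp++;
    this.log.push({ timestamp: commitTimestamp, writes: tx.writes });
    return commitTimestamp;
  }
}
```

When `commit` returns `null`, nothing has been appended, so the caller is free to rerun the mutation at a newer begin timestamp and try again.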

Updating a subscription

Since we added a new item to our cart with addCart, our view of getItems is no longer up-to-date. The subscription manager reads our new entry in the transaction log, determines that it overlaps with the read set of our previous getItems query, and notifies the sync worker.

The sync worker then tells the function runner to rerun getItems, which proceeds as before.

After completing execution, the function runner returns the updated result as well as the new begin timestamp and read set to the sync worker. The sync worker pushes the updated result to the client over the WebSocket and updates its subscription with the new read set.

And that’s pretty much it! As the user navigates in their client app, the set of queries they’re interested in changes, and the sync worker handles running new and updated queries and adding and removing read sets from the subscription manager[13].

Conclusion

There’s a lot we didn’t cover[14], but we’ve gone over the major parts of how Convex works! Clients connect to the sync worker, which delegates running JavaScript to the function runner, which then queries the database layer. We work hard to design Convex so developers don’t have to understand its internals, but we hope it’s been interesting and relevant for advanced Convex users to understand more deeply how their apps behave. Happy building!

Footnotes

1. We’re using Vite and deploying to Netlify. We could have also used Next or a different hosting provider like Vercel, Cloudflare Pages, or AWS Amplify.

2. V8 is Chrome’s high-performance JavaScript engine. It executes code quickly with Just In Time compilation and has been hardened over years of use in Chrome, Node, Electron, and Cloudflare Workers.

3. We currently use esbuild for bundling TypeScript and JavaScript in your convex/ folder.

4. Defining a good cross-platform format is a lot harder than it looks.

   - The JSON spec allows arbitrary precision numbers, recommends implementations use doubles, but does not support floating point special values like NaN. This can lead to silent loss of precision when parsing JSON in JS.
   - A fifth of the datatypes in MongoDB’s BSON are deprecated.
   - JavaScript strings can have ill-formed Unicode code point sequences with unpaired surrogate code points.
   - Firebase supports both integers and floating point numbers. Inserting a number into Firebase from JavaScript will convert it to a Firebase integer if it’s a safe integer and a Firebase float otherwise. However, inserting an integer greater than 2^53 from, say, a mobile client written in another language, will silently lose precision when read in JavaScript, potentially corrupting the row if JavaScript writes it back out.

5. Convex IDs are base32hex strings that use Crockford’s alphabet. Each ID contains a varint encoded table number, 14 bytes of randomness, a 2 byte timestamp, and a 2 byte version number and checksum. The randomness goes before the timestamp so writes are scattered in ID space, utilizing all shards' write throughput under range partitioning. The creation timestamp has day granularity and lets us efficiently ban ID reuse without having to keep deleted IDs around forever.

6. Convex’s timestamps are Hybrid Logical Clocks of nanoseconds since the Unix epoch in a 64-bit integer. We’re using small integers in this blog post for clarity, but a real timestamp would look something like 1711750041489939313.

7. Convex also includes an immutable _creationTime field on all documents of milliseconds since the Unix epoch in a 64-bit float. This format matches JavaScript’s Date object.

8. We don’t actually store many copies of the index. Instead, we use standard techniques for efficiently implementing “multiversion concurrency control.” See Lectures 3, 4, and 5 in CMU’s Advanced Database Systems course for an overview.

9. Some systems, like Redis, actually get away with serial execution! We can compute the number of requests served per second as one over the request latency. Since Redis keeps all of its state in-memory, simple commands can execute in microseconds, which yields hundreds of thousands of requests per second. Convex, on the other hand, has its state stored on SSDs and executes JavaScript functions, yielding request latencies in the milliseconds. So, if we ever want to serve more than hundreds of requests per second, we need to parallelize.

10. Our commit protocol is similar in design to FoundationDB’s and Aria’s.

11. Serializability is defined to be an equivalence towards some serial ordering of the transactions. In our case, the serial ordering is determined by the totally ordered commit timestamps. So, the overlapping checks are our way of “time traveling” the transaction forward in time to its position in the serial order.

12. It’s a lot faster to serve a cached result out of memory rather than have to spin up a V8 isolate and execute JavaScript. Convex automatically caches your queries, and the cache is always 100% consistent. We use an algorithm similar to the subscription manager’s for determining whether a cached result’s read set is still valid at a given timestamp.

13. The sync worker additionally guarantees that all queries in the client’s query set are at the same timestamp. So, components within the UI don’t have to worry about anomalies where queries execute at different timestamps and are inconsistent. We’ll talk more about our sync protocol in a future post.

14. We didn’t cover actions, auth, end-to-end type-safety, file storage, virtual system tables, scheduling, crons, import and export, text search and vector search indexes, pagination, and so on… Stay tuned for more posts!
