Shop Talk: Building an AI-Powered Voice-Controlled Shopping Agent with Daily Bots and Convex

Does anyone else feel overwhelmed by the flood of new AI projects and tools these days? I know I do.

I've found the best way to avoid analysis paralysis is to just dive in, start tinkering with a new tool and build something with it.

With that in mind, I'd like to show you a little demo I put together that combines a cool new AI tool called Daily Bots with Convex. It's a collaborative shopping list / todos application that you can control entirely with your voice.

Check out the demo video below, and if you're interested, I'll walk you through how I built it.

If you want to try this out yourself, the demo is available here: https://convex-shop-talk.vercel.app/ and the source is available here: https://github.com/get-convex/shop-talk

Architecture

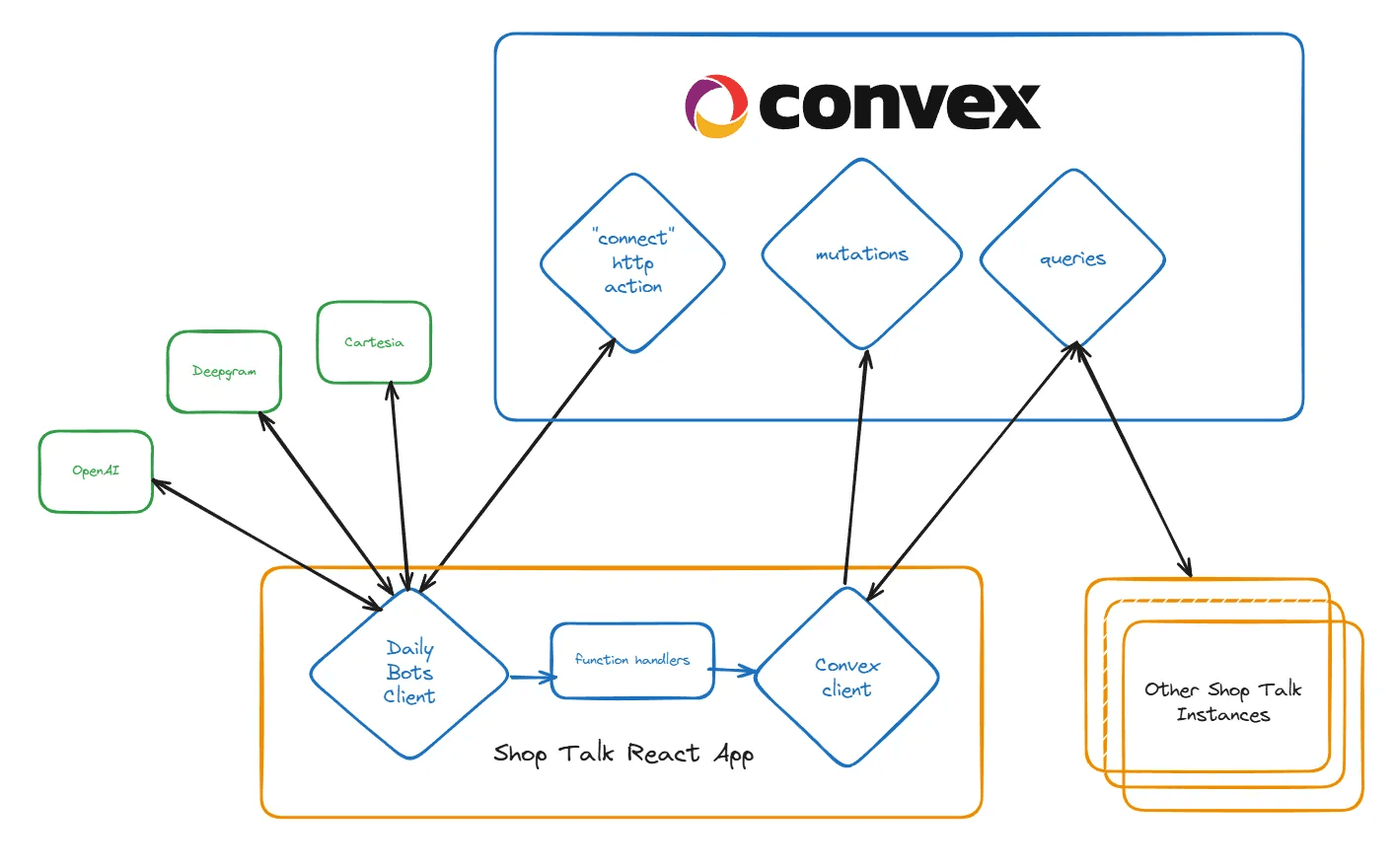

Before we begin, it might be useful to give a high-level view of what's going on here.

The Shop Talk app uses a Daily Bots client to enable voice controls through various services. This client triggers function handlers in our client-side app, which execute tasks on behalf of the LLM. These function handlers then communicate with Convex, which manages our state and ensures synchronization across all Shop Talk instances.

Don't worry if this isn't completely clear yet; I'll explain in more detail below.

Voice Control & Daily Bots

The headline feature of Shop Talk is the ability to interact with it entirely by voice.

There's a seemingly endless array of libraries and services that provide this functionality. Since that's honestly quite overwhelming, I went with one that a friend had success with: Daily Bots.

Daily Bots (Pipecat under the hood) is an all-in-one platform for voice-based interactions. It combines three essential components of a voice-controlled AI assistant:

- Speech to Text (STT) - converts your spoken words into text

- LLM "brain" - processes the text, understands context, executes functions, and generates responses

- Text to Speech (TTS) - transforms the text response back into spoken words

Daily Bots handles many behind-the-scenes tasks that you'd otherwise need to manage manually, including conversation history management, call recording, and performance metrics.

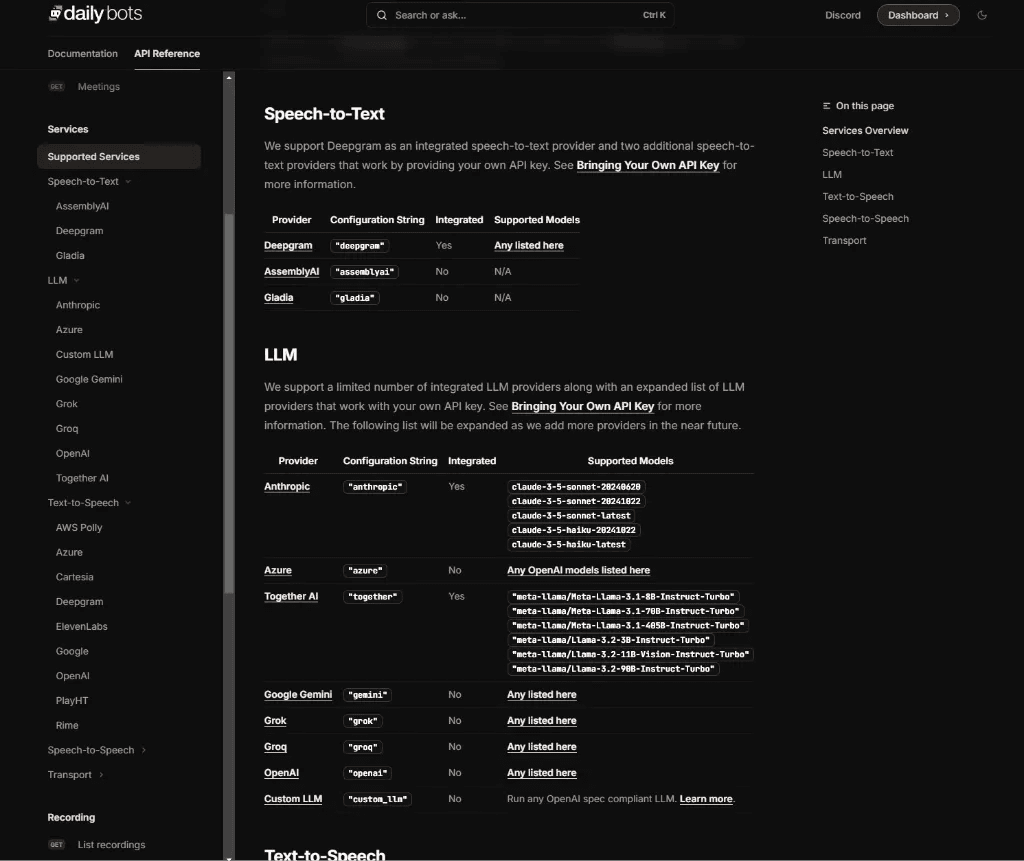

To clarify, Daily Bots (Pipecat) doesn't actually implement the STT, TTS, or LLM services; it's the orchestration layer that brings them all together. You can configure your choice of service for each component, and their documentation explains this setup process well.
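To make the "orchestration layer" idea a bit more concrete, here's a toy sketch of the loop that runs for each thing you say. It is not Pipecat's real API: the SpeechToText, LanguageModel, and TextToSpeech interfaces, the VoicePipeline class, and the fake services are all hypothetical, and audio is modelled as a plain string so the example stays self-contained.

```typescript
// Toy model of an orchestration layer in the spirit of Daily Bots / Pipecat.
// Everything here is hypothetical; audio is modelled as a plain string.
interface SpeechToText {
  transcribe(audio: string): Promise<string>;
}
interface LanguageModel {
  respond(history: readonly string[], userText: string): Promise<string>;
}
interface TextToSpeech {
  synthesize(text: string): Promise<string>;
}

class VoicePipeline {
  private readonly stt: SpeechToText;
  private readonly llm: LanguageModel;
  private readonly tts: TextToSpeech;
  // Conversation history: one of the chores the orchestration layer takes off your hands.
  private readonly history: string[] = [];

  constructor(stt: SpeechToText, llm: LanguageModel, tts: TextToSpeech) {
    this.stt = stt;
    this.llm = llm;
    this.tts = tts;
  }

  async handleUtterance(audio: string): Promise<string> {
    const userText = await this.stt.transcribe(audio); // 1. STT: speech -> text
    const reply = await this.llm.respond(this.history, userText); // 2. LLM: text -> reply
    this.history.push(`user: ${userText}`, `bot: ${reply}`);
    return this.tts.synthesize(reply); // 3. TTS: reply -> speech
  }

  get transcript(): readonly string[] {
    return this.history;
  }
}

// Swappable fakes standing in for real providers (e.g. deepgram / openai / cartesia).
const fakeStt: SpeechToText = { transcribe: async (audio) => audio.replace(/^\[audio\] /, "") };
const fakeLlm: LanguageModel = { respond: async (_history, text) => `Sure, adding ${text}` };
const fakeTts: TextToSpeech = { synthesize: async (text) => `[audio] ${text}` };

const pipeline = new VoicePipeline(fakeStt, fakeLlm, fakeTts);
```

The point of the design is that each service sits behind an interface, so swapping one STT or TTS provider for another doesn't touch the rest of the loop. The real thing also deals with streaming audio, interruptions, and latency, which this sketch ignores.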

For this project, I used the default services recommended in their documentation:

```ts
...
services: {
  stt: "deepgram",
  tts: "cartesia",
  llm: "openai",
},
...
```

If I were to turn this into a real product, I would definitely experiment with these services more to find the best combination, but for now these work well enough for this demo project.

Realtime Collaboration with Convex

Before I dive deeper into the specifics of how the voice control works, I want to quickly talk about how Convex fits in here. For this demo I used the following Convex features:

Convex HTTP actions

I needed HTTP actions for this project because the DailyBots client works by calling a REST endpoint when it starts up, connecting the user and initiating a session with the STT and TTS services.

```tsx
<RTVIClientProvider
  client={
    new RTVIClient({
      transport: new DailyTransport(),
      params: {
        // this is the root url of our Convex http actions
        // for example: https://adorable-grouse-876.convex.site
        baseUrl: import.meta.env.VITE_CONVEX_SITE_URL,
        endpoints: {
          connect: "/connect",
          action: "/actions",
        },
      },
    })
  }
>
  <App />
</RTVIClientProvider>
```

I won't show the connect or actions HTTP actions here, but if you're interested you can snoop around here: https://github.com/get-convex/shop-talk/blob/8d59d87c96ed56b10ff9ffbc4a03456519595e50/convex/http.ts
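A common reason for routing /connect through your own backend, rather than calling the voice provider straight from the browser, is to keep the provider's API key on the server. Here's a minimal sketch of that pattern, assuming that's the job /connect does. makeConnectHandler, the upstream URL, the Bearer header, and the Fetcher type are placeholders I made up, not Daily Bots' documented API. It uses the standard Fetch API Request/Response classes, which Convex HTTP actions also receive and return.

```typescript
// Hypothetical /connect-style proxy. The upstream URL, key, and header scheme are
// placeholders, NOT Daily Bots' real API; see convex/http.ts for the real handler.
type UpstreamInit = { method: "POST"; headers: Record<string, string>; body: string };
type Fetcher = (url: string, init: UpstreamInit) => Promise<Response>;

function makeConnectHandler(upstreamUrl: string, apiKey: string, fetcher: Fetcher) {
  return async (request: Request): Promise<Response> => {
    if (request.method !== "POST") {
      return new Response("Method not allowed", { status: 405 });
    }
    // Forward the client's session config, attaching the secret key server-side
    // so it never has to ship to the browser.
    const upstream = await fetcher(upstreamUrl, {
      method: "POST",
      headers: { "Content-Type": "application/json", Authorization: `Bearer ${apiKey}` },
      body: await request.text(),
    });
    // Relay the upstream answer (e.g. session details) back to the client.
    return new Response(await upstream.text(), {
      status: upstream.status,
      headers: { "Content-Type": "application/json" },
    });
  };
}
```

In a real Convex HTTP action you'd wrap this in httpAction and read the key from an environment variable; the fetcher parameter exists only so the sketch can be exercised without network access.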

Convex queries and mutations

Queries and mutations are the bread and butter of Convex. Here they provide fast, type-safe ways to create, read, update, and delete lists and list items.

I won't show all the code here (you can check it out at the links above), but here's a quick example of how we return all the items on a given shopping list:

```ts
import { query } from "../_generated/server";
import { v } from "convex/values";

export const getAllOnList = query({
  args: { listId: v.id("shoppingLists") },
  handler: async (ctx, args) => {
    const items = await ctx.db
      .query("shoppingListItems")
      .withIndex("by_listId", (q) => q.eq("listId", args.listId))
      .collect();

    return items;
  },
});
```
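If you're wondering what withIndex("by_listId", ...) buys you over filtering, here's a toy in-memory analogy. It only illustrates the idea (jump straight to the matching rows instead of scanning every item) and says nothing about how Convex actually stores its indexes; ItemsByListIndex and getAllOnListByScan are hypothetical names.

```typescript
// Toy analogy for the by_listId index; not how Convex implements indexes.
type Item = { _id: string; listId: string; label: string; completed: boolean };

class ItemsByListIndex {
  private readonly byListId = new Map<string, Item[]>();

  // Keep a bucket of items per listId, updated on every insert.
  insert(item: Item): void {
    const bucket = this.byListId.get(item.listId) ?? [];
    bucket.push(item);
    this.byListId.set(item.listId, bucket);
  }

  // Similar in spirit to .withIndex("by_listId", (q) => q.eq("listId", id)).collect():
  // a direct lookup, no matter how many other lists exist.
  getAllOnList(listId: string): Item[] {
    return [...(this.byListId.get(listId) ?? [])];
  }
}

// Without an index you'd have to look at every item in the table.
function getAllOnListByScan(items: Item[], listId: string): Item[] {
  return items.filter((item) => item.listId === listId);
}
```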

The schema for this demo project is really simple:

```ts
import { defineSchema, defineTable } from "convex/server";
import { v } from "convex/values";

export default defineSchema({
  shoppingLists: defineTable({
    name: v.string(),
  }).searchIndex("by_name", {
    searchField: "name",
  }),

  shoppingListItems: defineTable({
    listId: v.id("shoppingLists"),
    label: v.string(),
    completed: v.boolean(),
  })
    .searchIndex("by_label", {
      searchField: "label",
    })
    .index("by_listId", ["listId"]),
});
```

Note that, to keep things simple, this demo project doesn't include user management: all shopping lists are shared among everyone.

Because of this approach, I also needed to use...

Convex Cron jobs

Every day, we run a “janitor” function that resets all the state in the database:

```ts
import { cronJobs } from "convex/server";
import { internal } from "./_generated/api";

const crons = cronJobs();

crons.daily(
  "daily reset",
  { hourUTC: 0, minuteUTC: 0 },
  internal.janitor.dailyReset
);

export default crons;
```

This ensures that the publicly shared lists and items don't get out of control over time. I know what you guys are like 😉

The dailyReset function is here if you want to take a look.
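Since the schedule is { hourUTC: 0, minuteUTC: 0 }, the reset lands at midnight UTC, which is a different local time depending on where you are. Here's a small, hypothetical helper (nextDailyRun is not part of Convex's API) for working out when the next daily run will fire from a given moment, assuming the job runs exactly on schedule.

```typescript
// Hypothetical helper, not part of Convex: given "now", when does a job scheduled with
// crons.daily(..., { hourUTC, minuteUTC }, ...) next fire? Assumes runs happen exactly on time.
function nextDailyRun(now: Date, hourUTC: number, minuteUTC: number): Date {
  // Today's slot, built entirely in UTC so the machine's local time zone doesn't matter.
  const next = new Date(
    Date.UTC(now.getUTCFullYear(), now.getUTCMonth(), now.getUTCDate(), hourUTC, minuteUTC),
  );
  // If today's slot has already passed (or is exactly now), the next run is tomorrow.
  // setUTCDate rolls over months and years for us.
  if (next.getTime() <= now.getTime()) {
    next.setUTCDate(next.getUTCDate() + 1);
  }
  return next;
}

// Usage: when will the Shop Talk janitor ({ hourUTC: 0, minuteUTC: 0 }) next run?
console.log(`Lists reset at ${nextDailyRun(new Date(), 0, 0).toISOString()}`);
```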

DailyBots Function Calling

Now that we have our server-side functionality sorted out, how does the AI actually interact with those queries and mutations?

It works through function calling. When you give the AI a command like "Create a new shopping list called 'date night'", it returns both a natural response (like "Sure") and some "hidden" text that tells Daily Bots to execute a predefined function, in this case create_shopping_list.

The AI (LLM) can do this because we provide the names and structure of these functions as part of its context when making calls.
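Here's a rough sketch of what that "hidden" part can look like, assuming an OpenAI-style chat completion where function calls come back as tool_calls with their arguments encoded as a JSON string. Daily Bots does this parsing for us and passes the app something shaped like { functionName, arguments } (those are the fields the FunctionCallHandler reads); the types and the extractFunctionCalls helper here are simplified stand-ins, not Daily Bots' own.

```typescript
// Simplified, assumed shape of an OpenAI-style assistant message with tool calls.
interface ToolCall {
  id: string;
  type: "function";
  function: { name: string; arguments: string }; // arguments is a JSON-encoded string
}

interface AssistantMessage {
  content: string | null; // the natural-language part, e.g. "Sure"
  tool_calls?: ToolCall[]; // the "hidden" instructions to run functions
}

// Roughly the shape our handler receives: a function name plus parsed arguments.
interface ParsedCall {
  functionName: string;
  arguments: Record<string, unknown>;
}

function extractFunctionCalls(message: AssistantMessage): ParsedCall[] {
  return (message.tool_calls ?? []).map((call) => ({
    functionName: call.function.name,
    arguments: JSON.parse(call.function.arguments) as Record<string, unknown>,
  }));
}
```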

For Shop Talk, we define several functions:

```ts
import { RTVIClientConfigOption } from "@pipecat-ai/client-js";

export const functionDefinitions = {
  create_shopping_list: {
    name: "create_shopping_list",
    description: "Create a new shopping list with a name and optional items",
    parameters: {
      type: "object",
      properties: {
        name: {
          type: "string",
          description: "The name of the shopping list",
        },
      },
      required: ["name"],
    },
  },
  add_items: {
    name: "add_items",
    description: "Add multiple items to the current shopping list at once",
    parameters: {
      type: "object",

// ... and the rest
```

These are just the “definitions” of the functions. The code that implements what happens when the AI decides to call one of these functions lives on the client, in FunctionCallHandler.tsx:

```tsx
// Code edited for brevity...

export const FunctionCallHandler: React.FC = () => {
  // Our Convex mutation
  const createList = useMutation(api.shoppingLists.mutations.create);

  React.useEffect(() => {
    // Daily Bots callback to let us know the AI wants to call a function
    llmHelper.handleFunctionCall(async (fn: FunctionCallParams) => {
      const args = fn.arguments as any;
      const functionName = fn.functionName as FunctionNames;

      // When the AI wants to call create_shopping_list
      if (functionName === "create_shopping_list") {
        if (!args.name) return returnError("name is required");

        // We call our Convex mutation with the name of the list to create
        const listId = await createList({ name: args.name });

        return returnSuccess(`Created shopping list "${args.name}"`, {
          listId,
        });
      }

      // ... handling the other functions
```
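As the number of functions grows, that if-chain gets long. One option, sketched here as a hypothetical refactor rather than what Shop Talk actually does, is a lookup table of handlers where the required-argument check is driven by the same required lists the LLM sees in functionDefinitions. The returnSuccess/returnError helpers, the Result type, and fakeCreateList (standing in for the Convex mutation) are simplified assumptions.

```typescript
// Hypothetical refactor: handler lookup table plus a generic "required" check
// driven by the same JSON-schema definitions the LLM is given.
type JsonSchemaObject = { type: "object"; properties: Record<string, unknown>; required?: string[] };
type FunctionDefinition = { name: string; description: string; parameters: JsonSchemaObject };
type Result = { success: boolean; message: string; data?: Record<string, unknown> };
type Handler = (args: Record<string, unknown>) => Promise<Result>;

// Simplified stand-ins for the app's own returnSuccess / returnError helpers.
const returnSuccess = (message: string, data?: Record<string, unknown>): Result => ({
  success: true,
  message,
  data,
});
const returnError = (message: string): Result => ({ success: false, message });

const has = (obj: object, key: string) => Object.prototype.hasOwnProperty.call(obj, key);

function makeDispatcher(definitions: Record<string, FunctionDefinition>, handlers: Record<string, Handler>) {
  return async (functionName: string, args: Record<string, unknown>): Promise<Result> => {
    // Own-property checks so names like "toString" aren't mistaken for real functions.
    if (!has(definitions, functionName) || !has(handlers, functionName)) {
      return returnError(`Unknown function "${functionName}"`);
    }
    // Reject calls missing any argument the definition marks as required.
    for (const key of definitions[functionName].parameters.required ?? []) {
      const value = args[key];
      if (value === undefined || value === null || value === "") {
        return returnError(`${key} is required`);
      }
    }
    return handlers[functionName](args);
  };
}

// Usage, with a fake standing in for the Convex createList mutation.
const fakeCreateList = async (name: string) => `list_${name.replace(/\s+/g, "_")}`;

const dispatch = makeDispatcher(
  {
    create_shopping_list: {
      name: "create_shopping_list",
      description: "Create a new shopping list with a name and optional items",
      parameters: { type: "object", properties: { name: { type: "string" } }, required: ["name"] },
    },
  },
  {
    create_shopping_list: async (args) => {
      const name = String(args.name);
      const listId = await fakeCreateList(name);
      return returnSuccess(`Created shopping list "${name}"`, { listId });
    },
  },
);
```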

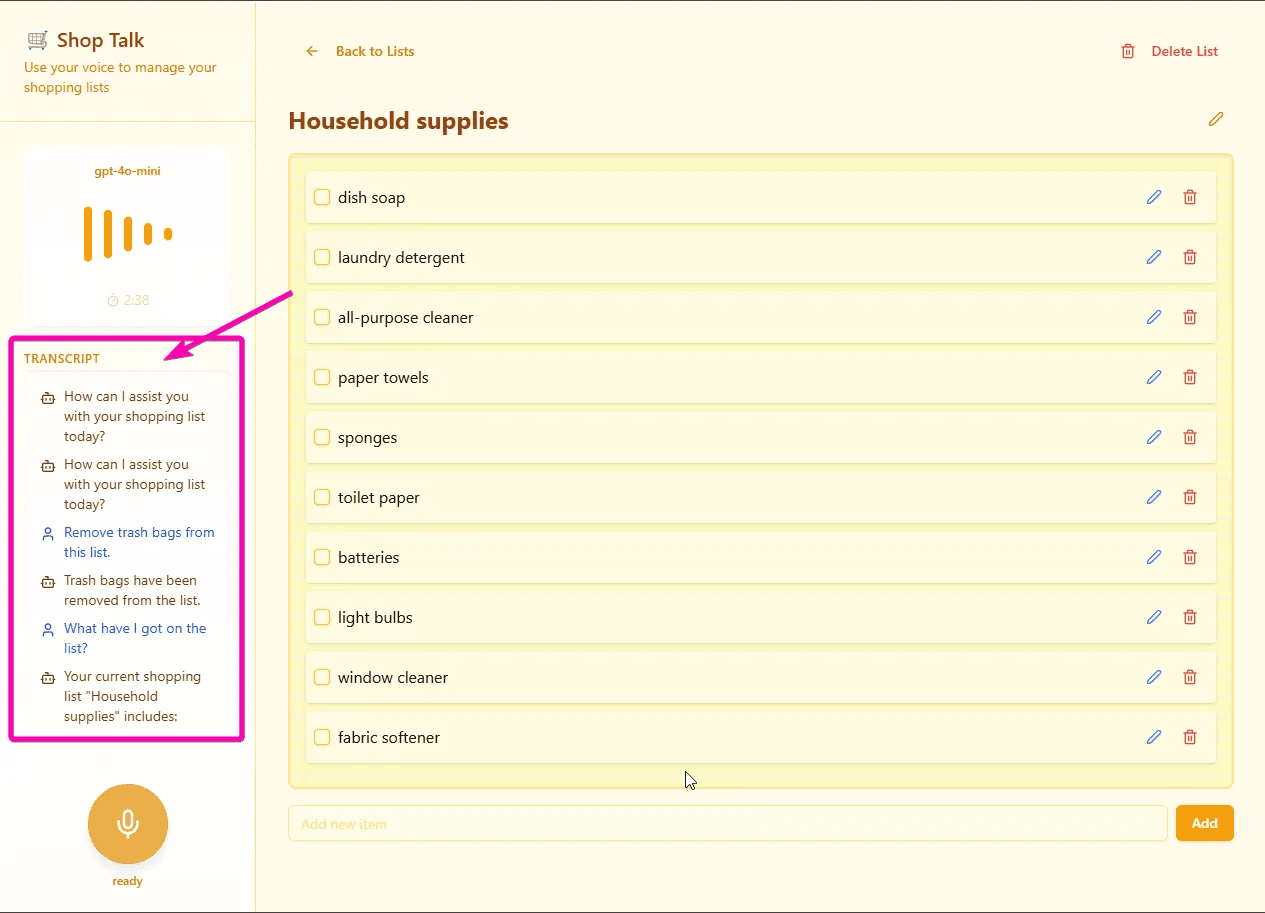

Transcripts

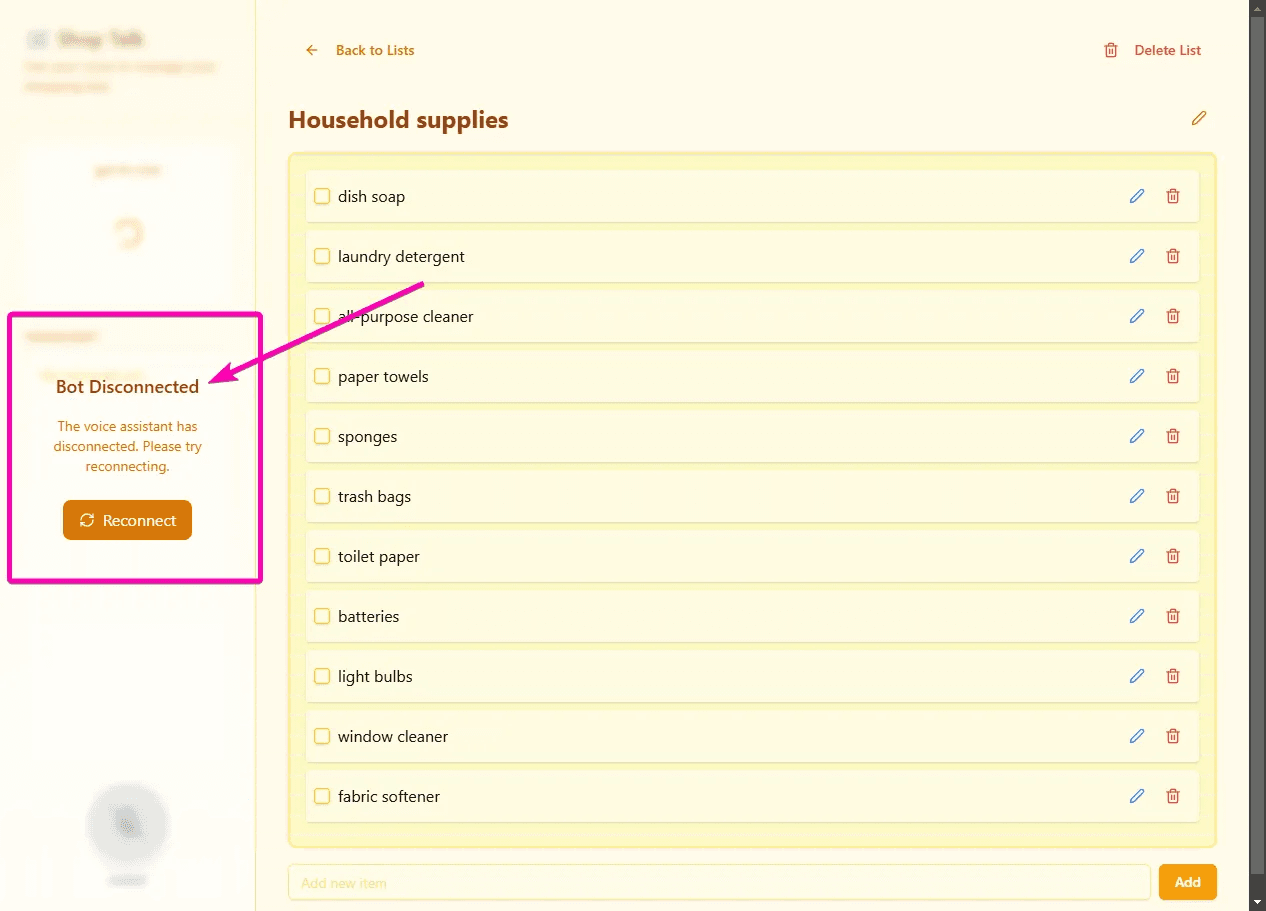

Daily Bots has a nice built-in feature that emits an event whenever the bot or the user speaks, providing what was said as text in the form of a transcript. I (well, Composer) was able to leverage this to produce a nice little transcript in the sidebar.

The transcript feature provides valuable debugging capabilities for voice recognition by showing exactly what the AI heard and how it responded. It also gives users a handy written record of their shopping list interactions for future reference.

While not essential, I thought this feature was cool enough to keep.
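The sidebar logic boils down to folding a stream of transcript events into lines of conversation. Here's a minimal sketch under an assumed event shape ({ speaker, text, final }); the real client's events and payloads may look different, and the Transcript class is hypothetical. Interim (non-final) text is shown but replaced as the STT service refines its guess, and consecutive final chunks from the same speaker are merged into one line.

```typescript
// Assumed event shape for illustration; not the exact Pipecat client payload.
type Speaker = "user" | "bot";
interface TranscriptEvent { speaker: Speaker; text: string; final: boolean }
interface TranscriptLine { speaker: Speaker; text: string }

class Transcript {
  private readonly lines: TranscriptLine[] = [];
  // Latest interim guess per speaker, replaced until a final result arrives.
  private readonly interim: Partial<Record<Speaker, string>> = {};

  handle(event: TranscriptEvent): void {
    if (!event.final) {
      this.interim[event.speaker] = event.text;
      return;
    }
    delete this.interim[event.speaker];
    const last = this.lines[this.lines.length - 1];
    if (last && last.speaker === event.speaker) {
      last.text = `${last.text} ${event.text}`; // same speaker keeps talking: extend the line
    } else {
      this.lines.push({ speaker: event.speaker, text: event.text });
    }
  }

  render(): string[] {
    const label = (s: Speaker) => (s === "user" ? "You" : "Bot");
    const finalized = this.lines.map((l) => `${label(l.speaker)}: ${l.text}`);
    const pending = (["user", "bot"] as Speaker[])
      .filter((s) => this.interim[s] !== undefined)
      .map((s) => `${label(s)}: ${this.interim[s]}...`);
    return [...finalized, ...pending];
  }
}
```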

Cost Control

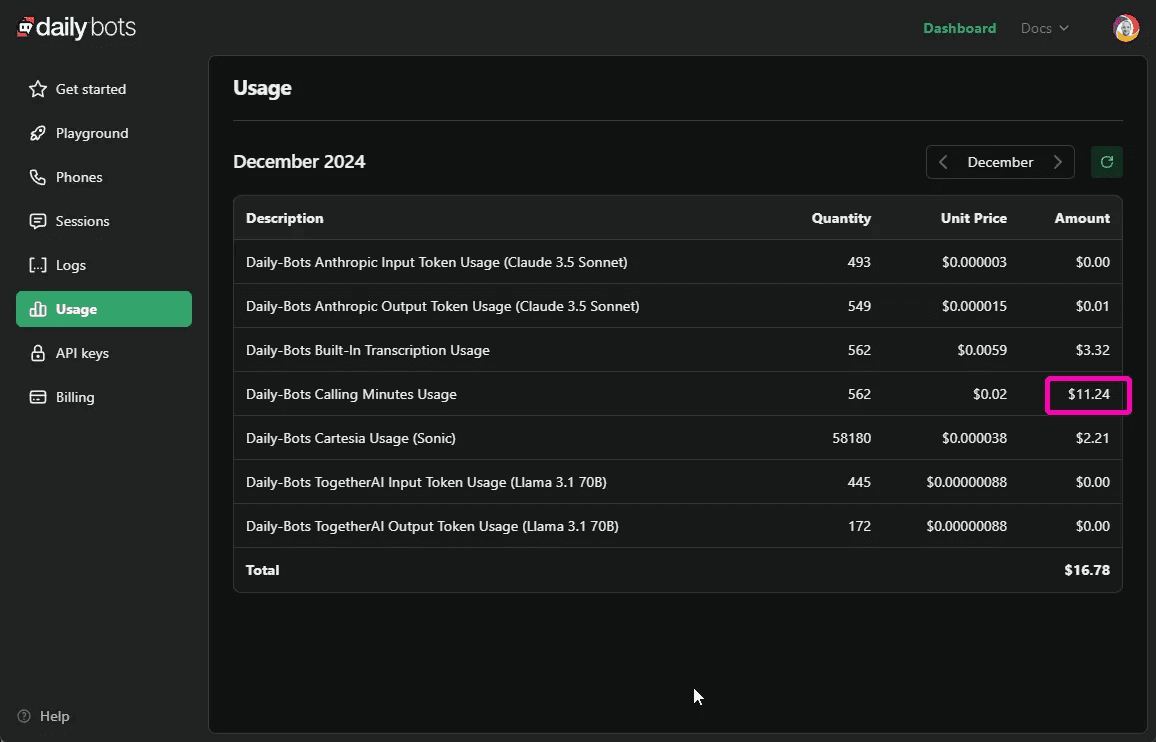

After a day of development and a team show-and-tell, I checked the DailyBots usage report and was surprised by the costs:

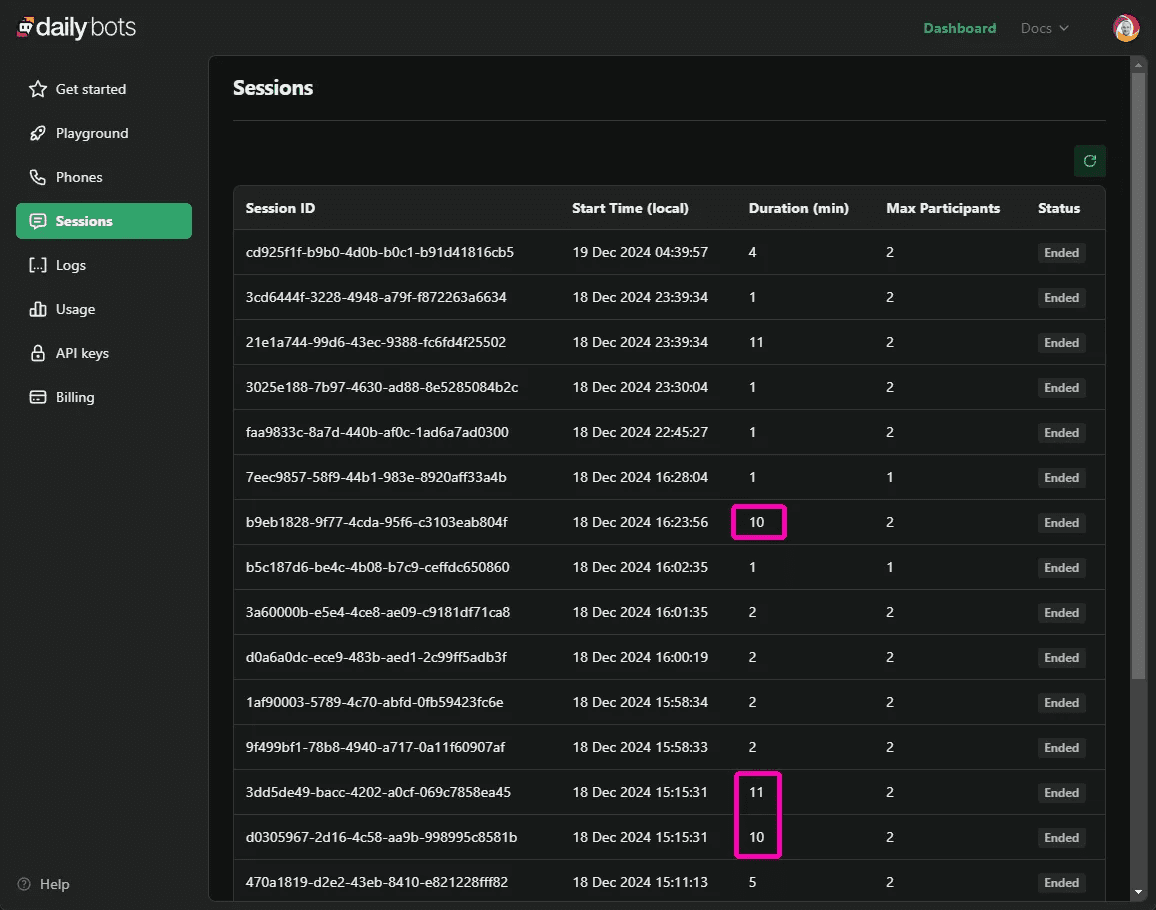

So I dug into it a bit more and noticed that there were a bunch of long sessions that were causing the high minutes usage:

This isn't surprising, since opening the tab automatically starts a DailyBots connection. Even when users mute themselves, the connection remains open and counts as a continuous "Session."

At $0.02 per minute, a 10-minute session costs 20 cents. That's not devastating given the functionality, but it's quite expensive from a business perspective, so you'd need to be careful about pricing your application and metering usage.
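Here's that arithmetic as code, working in integer cents to avoid floating-point surprises. It assumes billing is linear in connected minutes at the $0.02 rate above, with no per-minute rounding or other fees, which may not match the actual invoice; sessionCostCents and formatDollars are just illustrative helpers.

```typescript
// Assumes linear billing at $0.02 per connected minute, no rounding or extra fees.
const CENTS_PER_MINUTE = 2;

// Cost of one session; an optional cap models a forced disconnect after N minutes.
function sessionCostCents(connectedMinutes: number, maxSessionMinutes = Infinity): number {
  const billedMinutes = Math.min(connectedMinutes, maxSessionMinutes);
  return billedMinutes * CENTS_PER_MINUTE;
}

const formatDollars = (cents: number) => `$${(cents / 100).toFixed(2)}`;

console.log(formatDollars(sessionCostCents(10))); // a 10-minute session
```

For example, a tab left open and forgotten for an hour would come to $1.20, whereas a 3-minute cap limits it to 6 cents, assuming the user doesn't reconnect.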

To help control these potential costs, I reduced the maximum session length from 10 minutes to 3 minutes and added a popup that lets users reconnect after they've been disconnected.

It would have been nice if Daily Bots offered an option to automatically detect inactivity and disconnect, or pause metering, after a period of time. The cynical side of me suspects, however, that they aren't financially motivated to implement this feature.
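Until something like that exists, the client can enforce both limits itself. Here's a hypothetical sketch of a watchdog that ends the session when it reaches a maximum length (3 minutes in Shop Talk) or, as a possible extra, after a stretch with no speech. SessionWatchdog and the idle timeout are my assumptions, not a Daily Bots feature, and time is passed in explicitly so the logic is easy to test.

```typescript
// Hypothetical client-side watchdog; the idle timeout is an assumed extra, not a Daily Bots feature.
type StopReason = "max-duration" | "inactive";

class SessionWatchdog {
  private readonly startedAtMs: number;
  private readonly maxDurationMs: number;
  private readonly idleTimeoutMs: number;
  private lastActivityMs: number;

  constructor(startedAtMs: number, maxDurationMs: number, idleTimeoutMs: number) {
    this.startedAtMs = startedAtMs;
    this.maxDurationMs = maxDurationMs;
    this.idleTimeoutMs = idleTimeoutMs;
    this.lastActivityMs = startedAtMs;
  }

  // Call whenever the user or bot speaks (e.g. from a transcript event).
  recordActivity(nowMs: number): void {
    this.lastActivityMs = nowMs;
  }

  // Returns why the session should end, or null if it may continue.
  check(nowMs: number): StopReason | null {
    if (nowMs - this.startedAtMs >= this.maxDurationMs) return "max-duration";
    if (nowMs - this.lastActivityMs >= this.idleTimeoutMs) return "inactive";
    return null;
  }
}
```

In the app you might call check(Date.now()) from a setInterval, disconnect when it returns a reason, and show the reconnect popup.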

I will continue to monitor the costs for this and if they start to get out of control I may have to put in some rate limiting 😅
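If it does come to that, one simple option is a fixed-window counter per client key (an IP address, say). This is an in-memory sketch only, with hypothetical names; in production the counts would need to live in shared storage, such as a Convex table, so the limit holds no matter which server instance handles a request.

```typescript
// Hypothetical fixed-window rate limiter, kept in memory for illustration only.
class FixedWindowRateLimiter {
  private readonly limit: number;
  private readonly windowMs: number;
  private readonly windows = new Map<string, { startMs: number; count: number }>();

  constructor(limit: number, windowMs: number) {
    this.limit = limit;
    this.windowMs = windowMs;
  }

  // Returns true if the caller identified by `key` may proceed at time `nowMs`.
  tryAcquire(key: string, nowMs: number): boolean {
    const current = this.windows.get(key);
    // No window yet, or the old one has expired: start a fresh window with this request.
    if (!current || nowMs - current.startMs >= this.windowMs) {
      this.windows.set(key, { startMs: nowMs, count: 1 });
      return true;
    }
    if (current.count < this.limit) {
      current.count += 1;
      return true;
    }
    return false; // over the limit until the window resets
  }
}

// Usage: at most 5 new voice sessions per IP per hour (numbers picked for illustration).
const sessionLimiter = new FixedWindowRateLimiter(5, 60 * 60 * 1000);
```

Fixed windows allow a burst right at a window boundary; a sliding window or token bucket smooths that out at the cost of a little more bookkeeping.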

Tangent: Cursor Composer

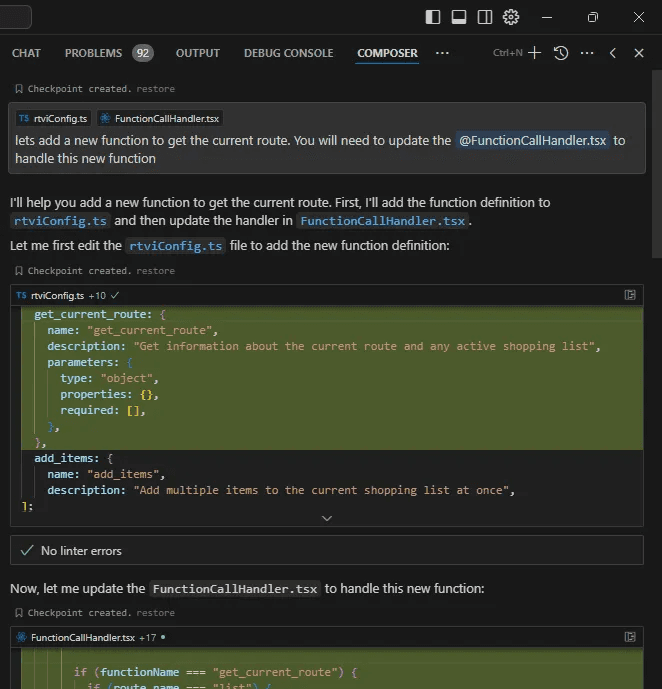

Apologies, I’m going to go on a quick tangent here: during the development of this demo I made copious use of Cursor’s new Composer. It’s the first project I’ve used it in seriously, and I have to say, I’m seriously impressed.

It really sped up development. Now that it has built-in linting after code generation, it’s much more reliable than it has been in the past.

I think I need to dedicate an entire post to Composer and Convex, but for now here are some quick thoughts:

- Great for really labor-intensive tasks like “This React component is getting a little large, let’s split it up into separate components in separate files”. This saved me so much refactoring time.

- I added my own rules, both project rules and personal rules in Cursor itself. This is still an ongoing experiment for me; I’m not sure how much attention it pays to them, as it sometimes totally ignores clear instructions.

- Cursor does an “okay” job with Convex code. It knows how to do some things but not others. I think there’s work for me to do here in exploring the best ways to give Cursor more context on how best to use Convex.

- After the initial frantic prototyping phase with v0 and Composer, I did have to go through and spend some time understanding and untangling a bunch of things by hand. That was important to me, as the code needs to be human-readable and easily digestible for demo purposes.

- I’m not great with Tailwind, so it’s great that Composer does a more than good enough job of iterating on its magical syntax. I do wonder how it would fare in a much larger and more strictly controlled project, however.

Anyway, enough of that tangent. Let’s get back to Shop Talk, shall we?

Is this the future?

So is real-time voice interaction going to be embedded into every app? I'm not so sure.

While it's really cool and relatively easy to implement, I worry about two main barriers. First, the current cost economics might not make sense for most web or mobile apps. Second, users have been trained to interact with apps using a mouse, keyboard, or touch, and getting them to switch to voice could be challenging.

Having said that, I can see these capabilities being integrated into our general assistants like Siri, Google, or Alexa. Imagine saying "I want pizza tonight, please add the ingredients to my shopping list" and having it quietly update your list in the background without requiring you to open any apps. That would be truly compelling. This makes me once again question what the future of apps looks like 🤔.

Thoughts on Daily Bots

Overall, I'm impressed with Daily Bots' functionality. Having developed voice-controlled apps in the past, I appreciate the effort they've put into simplifying these complex features.

However, I did encounter several issues and rough edges during development, which I'll outline below:

- The documentation needs improvement, with many broken or outdated examples and gaps in crucial areas.

- It's particularly confusing that they recommend including both the @pipecat-ai/client-react and realtime-ai packages. These export similar types with identical names, making VS Code auto-import and AI code generation unnecessarily difficult.

- Their API design raises some questions, especially the LLMHelper, which seems overly complex. A single client object would be more straightforward than multiple helper classes.

- I encountered several issues that might be bugs or questionable design choices. For instance, when updating LLM context locally, the assistant would restart despite explicitly setting the flag to prevent interruption.

Finally

Cheers for checking this out, I hope you found it interesting! After diving in and getting hands-on experience with these AI tools, I feel much less anxious about the state of AI. If you're feeling uncertain too, I highly encourage you to jump in and try these tools and services yourself. There's nothing like direct experience to help you form your own opinions!

If you want to try out Shop Talk, the demo is available here: https://convex-shop-talk.vercel.app/ and the source is available here: https://github.com/get-convex/shop-talk

Until next time, cheerio! 👋

Convex is the backend platform with everything you need to build your full-stack AI project. Cloud functions, a database, file storage, scheduling, workflow, vector search, and realtime updates fit together seamlessly.