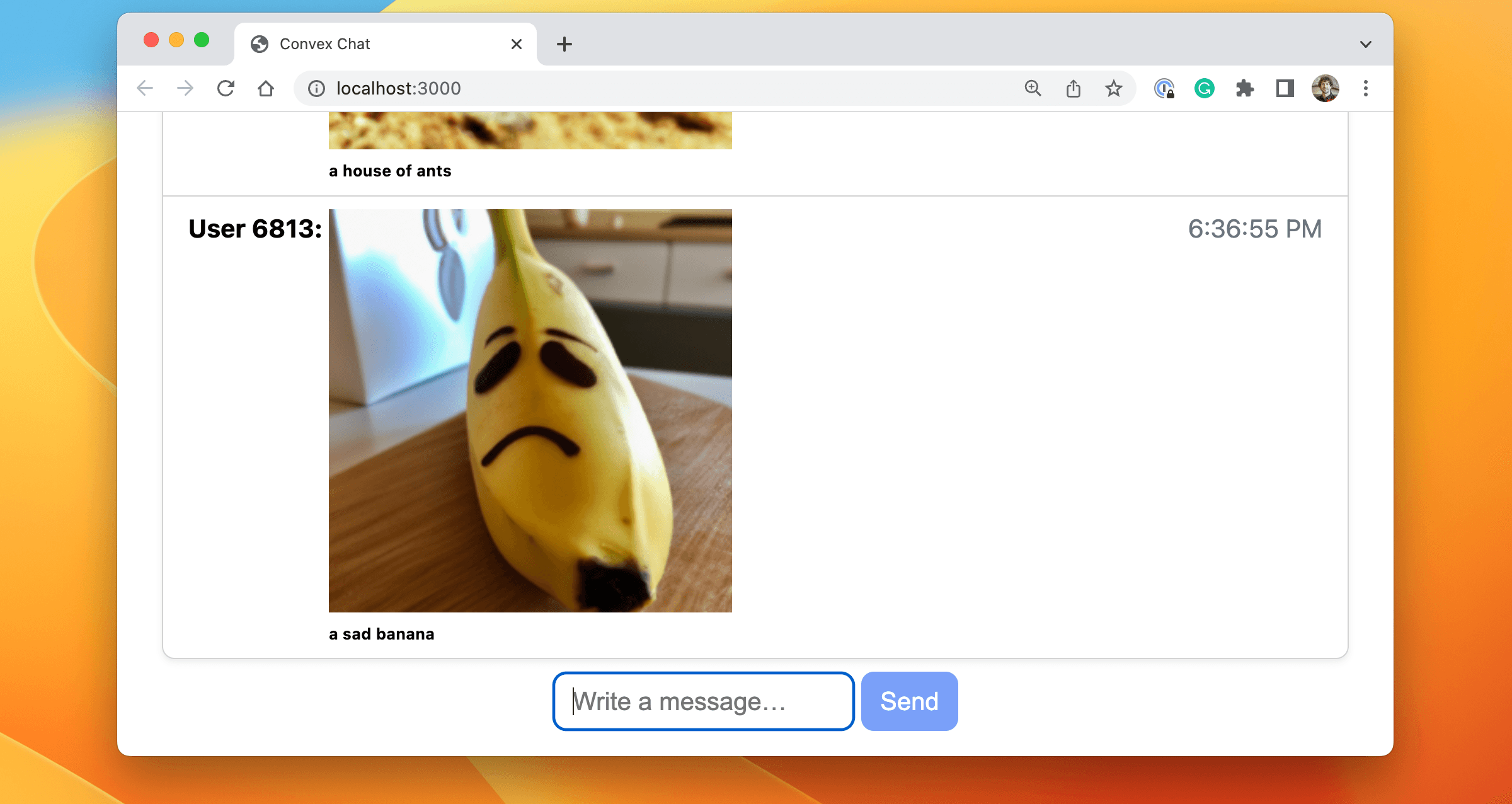

Using Dall-E from Convex

This is part of a series of posts about building a multiplayer game on Convex. In this post, we’ll explore using Dall-E via the OpenAI API. The code is here if you want to play around with it. We will make a chat app where you can type in an image description as a slash command, and it will generate and insert that image into the chat. On the server, it will:

- Configure an OpenAI client.

- Check that the prompt is not offensive using their moderation API.

- Get the short-lived image URL from Dall-E.

- Download the image.

- Upload the image into Convex File Storage, getting a storage ID.

- Insert the storage ID into a new message.

- When serving the message to the client, we will generate a URL based on the storage ID, which the client can put directly in an `<img>` tag.
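Here is a minimal sketch that wires those steps together in order. It is not the demo's code. `generateDallEMessage` and the `deps` object are hypothetical. Each dependency is a plain async function that stands in for the OpenAI, download, storage, or mutation call covered in the sections that follow, so the control flow can run and be tested without network access.

```javascript
// Hypothetical sketch of the server-side steps listed above. Each external
// call (moderation, Dall-E, download, storage, mutation) is injected as a
// plain async function so the ordering can be exercised without real services.
async function generateDallEMessage(deps, { prompt, author }) {
  // 1. Reject prompts that the moderation endpoint flags.
  const moderation = await deps.moderate(prompt);
  if (moderation.flagged) {
    throw new Error(
      `Your prompt was flagged: ${JSON.stringify(moderation.categories)}`
    );
  }
  // 2. Ask Dall-E for an image; the URL it returns is short-lived.
  const imageUrl = await deps.generateImage(prompt);
  // 3. Download the bytes before that URL expires.
  const image = await deps.download(imageUrl);
  // 4. Persist the bytes and keep only the storage ID.
  const storageId = await deps.store(image);
  // 5. Save a chat message that points at the stored image.
  await deps.insertMessage({ body: storageId, author, format: "dall-e" });
  return storageId;
}
```

In the real action, each injected function would wrap the corresponding OpenAI, `fetch`, or `ctx` call.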

This is now possible using File Storage and a Convex Action, a serverless function that can have side effects, comparable to AWS Lambda. Read below for how to use OpenAI with Convex, or check out the code here.

Using OpenAI in Convex

Set up Convex

Create a Convex app by cloning the demo and running `npm i; npm run dev`. If you want a fresh project, see the Tutorial.

If you’re writing your app from scratch, create a file in the `convex/` folder. Mine is `convex/actions/sendDallE.js`.

Configuring OpenAI

- To use OpenAI, you need an API account. Sign up here.

- Generate an API key here by clicking “Create new secret key” and copying the text.

- Store the API key in Convex’s environment variables, where you can store secrets. Go to your project’s dashboard by running `npx convex dashboard` in your project directory, or find it on dashboard.convex.dev. Open the project settings on the left and enter the key under the name `OPENAI_API_KEY`. I’d recommend saving it in both your Production and Development deployments unless you want the published version of your app to use a different key. There is a toggle to switch between them.

- Configure the client in your action. If you get type errors, run `npm install openai`. This code uses version 3 of the `openai` package; version 4 replaced `Configuration` and `OpenAIApi` with a single `OpenAI` class.

```javascript
"use node";
import { Configuration, OpenAIApi } from "openai";

// Read the key saved in the Convex dashboard's environment variables.
const configuration = new Configuration({
  apiKey: process.env.OPENAI_API_KEY,
});
const openai = new OpenAIApi(configuration);
```
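Because the key has to be saved separately for the Development and Production deployments, a missing key is an easy mistake. One option is a small guard like the hypothetical `requireOpenAIKey` below, called inside the action handler when building the client (for example, `apiKey: requireOpenAIKey()`). The function name and error text are assumptions, not part of Convex or the OpenAI SDK.

```javascript
// Hypothetical guard: read OPENAI_API_KEY and fail with a readable message
// if it is missing or blank, instead of letting OpenAI requests fail later
// with an authentication error.
function requireOpenAIKey(env = process.env) {
  const key = env.OPENAI_API_KEY;
  if (typeof key !== "string" || key.trim() === "") {
    throw new Error(
      "OPENAI_API_KEY is not set for this deployment; add it in the Convex dashboard's environment variables."
    );
  }
  // Trim in case whitespace was pasted along with the key.
  return key.trim();
}
```

Calling it inside the handler, rather than at the top of the file, means a missing key only breaks this action instead of failing when the module loads.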

Using the Moderation API

The moderation API is useful for checking whether a prompt is obscene or will be rejected by OpenAI. For our purposes, we’ll check if it flags the message:

```javascript
const modResponse = await openai.createModeration({
  input: prompt,
});
const modResult = modResponse.data.results[0];
// Refuse to send prompts that OpenAI's moderation model flags.
if (modResult.flagged) {
  throw new Error(
    `Your prompt was flagged: ${JSON.stringify(modResult.categories)}`
  );
}
```

The result also supports more fine-grained moderation: `categories` holds a true/false flag per category, and `category_scores` holds a score per category. Consult the moderation documentation for more details.
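The hypothetical `moderationViolations` function below sketches that finer-grained approach. It compares each score in `category_scores` against thresholds you choose. The threshold values in the test are arbitrary examples, not OpenAI recommendations.

```javascript
// Hypothetical stricter check on top of `flagged`: compare each entry in
// `category_scores` against a per-category threshold of our own choosing.
// Categories without a threshold are ignored.
function moderationViolations(result, thresholds) {
  const violations = [];
  for (const [category, limit] of Object.entries(thresholds)) {
    const score = result.category_scores?.[category];
    if (typeof score === "number" && score >= limit) {
      violations.push({ category, score });
    }
  }
  // Highest score first, so the most relevant reason is reported first.
  return violations.sort((a, b) => b.score - a.score);
}
```

In the action, a non-empty result could be turned into an error the same way the `flagged` check is.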

Generating an image with Dall-E

You can consult the image generation documentation for more details, but for our purposes, the code is:

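```javascript
const openaiResponse = await openai.createImage({
  prompt,
  n: 1, // generate a single image
  size: "256x256",
});
// The response body holds a list of generated images; take the first URL.
const dallEImageUrl = openaiResponse.data.data[0].url;
```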

Now we have a URL to the image. However, this URL only lasts for an hour. If we want to be able to see this image in the chat longer term, we need to store it.
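Since `data.data[0].url` is `undefined` when the response has an unexpected shape, it can help to validate it before downloading. A minimal sketch follows. It assumes the response body has the `{ created, data: [{ url }] }` shape used above, and `firstImageUrl` is a hypothetical helper name.

```javascript
// Hypothetical helper: pull the first image URL out of an image-generation
// response body, throwing instead of passing `undefined` along.
function firstImageUrl(body) {
  const url = body?.data?.[0]?.url;
  if (typeof url !== "string" || !/^https?:\/\//.test(url)) {
    throw new Error("Dall-E response did not contain an image URL");
  }
  return url;
}
```

With the v3 client, it would be called as `firstImageUrl(openaiResponse.data)`.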

Downloading the image

To download the image, we use the node-fetch NPM library.

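```javascript
import fetch from "node-fetch";

// Download the image while the short-lived Dall-E URL is still valid.
const imageResponse = await fetch(dallEImageUrl);
const image = await imageResponse.blob();
```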

Now we have the image in memory and can store it in Convex.
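The download snippet doesn't check the HTTP status, so an expired URL or a failed request would still reach storage. A hedged variant, `downloadImage` (a hypothetical name), checks `response.ok` first. It takes the fetch function as a parameter so it can be tested with a stub. By default it uses the global `fetch` available in Node 18 and later.

```javascript
// Hypothetical sketch: download the image, but check the HTTP status first so
// an expired or failed Dall-E URL raises an error instead of being stored.
// `fetchImpl` defaults to the global fetch (Node 18+).
async function downloadImage(url, fetchImpl = globalThis.fetch) {
  const response = await fetchImpl(url);
  if (!response.ok) {
    throw new Error(`Image download failed with HTTP ${response.status}`);
  }
  return response.blob();
}
```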

Storing the image in Convex

Storing the image in Convex is simple:

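```javascript
const storageId = await ctx.storage.store(image);
```

where `ctx` is the first parameter to the action function:

```javascript
// in convex/actions/sendDallE.js
"use node";
import { action } from "../_generated/server";

export default action(async (ctx, { prompt, author }) => {
  // ...moderation, image generation, download, and storage steps from above...
});
```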
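To unit-test the action's logic without a deployment, one option is an in-memory stand-in that exposes the same two method names this post uses, `store` and `getUrl`. `FakeStorage` is a hypothetical test double. Its IDs and URLs are made up, and it does not reflect how Convex File Storage works internally.

```javascript
// Hypothetical in-memory test double for the two storage methods used in
// this post. IDs and URLs are invented; this is not Convex's implementation.
class FakeStorage {
  constructor(baseUrl = "https://files.example.test") {
    this.baseUrl = baseUrl;
    this.files = new Map();
    this.nextId = 1;
  }

  // Keep the blob in memory and hand back a new opaque ID.
  async store(blob) {
    const id = `storage-${this.nextId++}`;
    this.files.set(id, blob);
    return id;
  }

  // Return a URL for known IDs and null for unknown ones.
  async getUrl(id) {
    return this.files.has(id) ? `${this.baseUrl}/${id}` : null;
  }
}
```

A test could pass `{ storage: new FakeStorage() }` wherever the handler expects `ctx`, as long as the code under test only touches `ctx.storage`.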

Store the storage ID in the database

The storage ID returned by `ctx.storage.store` is a permanent identifier for this data. You can store it in your database however you’d like. In our example, we store it in a new chat message.

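```javascript
// in convex/actions/sendDallE.js (add this import at the top of the file)
import { api } from "../_generated/api";

// ...then, inside the action, after storing the image:
await ctx.runMutation(api.messages.send, {
  body: storageId,
  author,
  format: "dall-e",
});
```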

And the mutation that stores it, for completeness:

```javascript
// in convex/messages.js
import { mutation } from "./_generated/server";

export const send = mutation(async (ctx, { body, author, format }) => {
  // A bit of a hack: for "dall-e" messages, "body" holds the storage ID
  await ctx.db.insert("messages", { body, author, format });
});
```

One thing to note is that this mutation can’t live in the same file as the OpenAI action. Actions can run in a Node.js environment (with the `"use node";` string at the top of the file), whereas queries and mutations run in our optimized runtime (which makes them wicked fast). You can read more about that here. I put mine in `convex/messages.js`.

Serving the image to the client

In Convex, clients subscribe to queries. For instance, in our frontend React component, we can do:

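```jsx
import { useQuery } from "convex/react";
import { api } from "../convex/_generated/api"; // path depends on your project layout

const messages = useQuery(api.messages.list) || [];
// ...
{messages.map((message) => (
  <Message author={message.author} body={message.body} format={message.format} key={message._id.toString()} />
))}
```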

That query maps to a serverless function: the `list` export of `convex/messages.js`. To include image URLs in the messages we return to clients, we generate a URL from each storage ID. In `convex/messages.js`:

```javascript
import { query } from "./_generated/server";

export const list = query(async (ctx) => {
  const messages = await ctx.db.query("messages").collect();
  for (const message of messages) {
    if (message.format === "dall-e") {
      // Replace the storage ID with a URL in the "body"
      message.body = await ctx.storage.getUrl(message.body);
    }
  }
  return messages;
});
```

This URL won’t change on each request, so you don’t have to worry about caching the URL on the client. The HTTP response also has cache headers, so you don't need to worry about client-side image caching either.
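The loop in `list` awaits each `getUrl` call in turn. A variant could resolve them concurrently with `Promise.all` and return copies instead of mutating the documents. `withImageUrls` is a hypothetical helper, and whether it is faster inside a Convex query is not something measured here.

```javascript
// Hypothetical variant of the loop in `list`: resolve every Dall-E storage ID
// concurrently and return new message objects instead of mutating the
// originals. Promise.all preserves the input order.
async function withImageUrls(messages, getUrl) {
  return Promise.all(
    messages.map(async (message) =>
      message.format === "dall-e"
        ? { ...message, body: await getUrl(message.body) }
        : message
    )
  );
}
```

Inside `list`, the loop would become `return withImageUrls(messages, (id) => ctx.storage.getUrl(id));`.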

Performance

One thing I discovered while building this is how slow the OpenAI API calls can be. In my testing, the moderation call took 500 ms, and image generation could take 5+ seconds, sometimes over 60 seconds, which timed out the request. For a good user experience, a loading indicator is important. On the client, I used this code:

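```jsx
// In a React component file:
import { useState } from "react";
import { useAction } from "convex/react";
import { api } from "../convex/_generated/api"; // path depends on your project layout

const [sending, setSending] = useState(false);
const sendDallE = useAction(api.actions.sendDallE.default);
// ...in the submit handler:
setSending(true); // show the loading indicator
try {
  await sendDallE({ prompt, author: name });
} finally {
  // Hide the indicator whether the action succeeded or threw.
  setSending(false);
}
```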
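The `prompt` passed to `sendDallE` comes from the slash command the user types. Below is a minimal parsing sketch. It assumes a command named `/dall-e`, which is a placeholder because the actual command name in the demo isn't stated. It returns `null` for ordinary chat text and the image description otherwise.

```javascript
// Hypothetical client-side parser. "/dall-e" is an assumed command name.
const DALL_E_COMMAND = "/dall-e";

function parseDallECommand(text) {
  const trimmed = text.trim();
  const isCommand =
    trimmed === DALL_E_COMMAND || trimmed.startsWith(DALL_E_COMMAND + " ");
  if (!isCommand) return null; // ordinary chat message
  // Everything after the command name is the image description.
  return trimmed.slice(DALL_E_COMMAND.length).trim();
}
```

An empty string means the user typed only the command, which the client could reject before calling `sendDallE`. A `null` result would go through the regular text-message path.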

Summary

In this post, we used Convex to fetch an image from OpenAI’s image generation service based on a user-provided prompt. Read about the game I've developed on Convex using Dall-E APIs here for more tips, or read the code to see how I added error handling and more.
