Moderating ChatGPT Content: Full-Stack

Trying to build an app on top of OpenAI’s DALL-E or ChatGPT? Avoid violating the OpenAI usage policies or showing inappropriate content to your users by using OpenAI’s Moderation API. In this post, we’ll look at how to use it to flag messages before sending them to ChatGPT. This builds on the Building a full-stack ChatGPT app post, but the approach is generally applicable.

New to Convex? Convex is a backend application platform. We make it easy to build apps where you need to run code on the server and store data in a database. We run your functions, host your data, and much more. It’s a great fit for working with powerful APIs like OpenAI, as it allows you to build a whole application around powerful AI models without running any servers yourself.

Without further ado, let’s look at how to use OpenAI’s moderation endpoints.

Initializing the API:

The core piece of code is in the convex/openai.js file. Initializing the client takes less than a millisecond, so we can do it on each request.

// Configuration and OpenAIApi come from the "openai" package (v3 SDK).
const apiKey = process.env.OPENAI_API_KEY;
if (!apiKey) {
  // `fail` is a helper defined elsewhere in the app (not shown here).
  await fail(
    "Add your OPENAI_API_KEY as an env variable in the " +
      "[dashboard](https://dashboard.convex.dev)"
  );
}
const configuration = new Configuration({ apiKey });
const openai = new OpenAIApi(configuration);

Using the moderation API:

// Check if the message is offensive.
const modResponse = await openai.createModeration({
  input: body,
});
const modResult = modResponse.data.results[0];

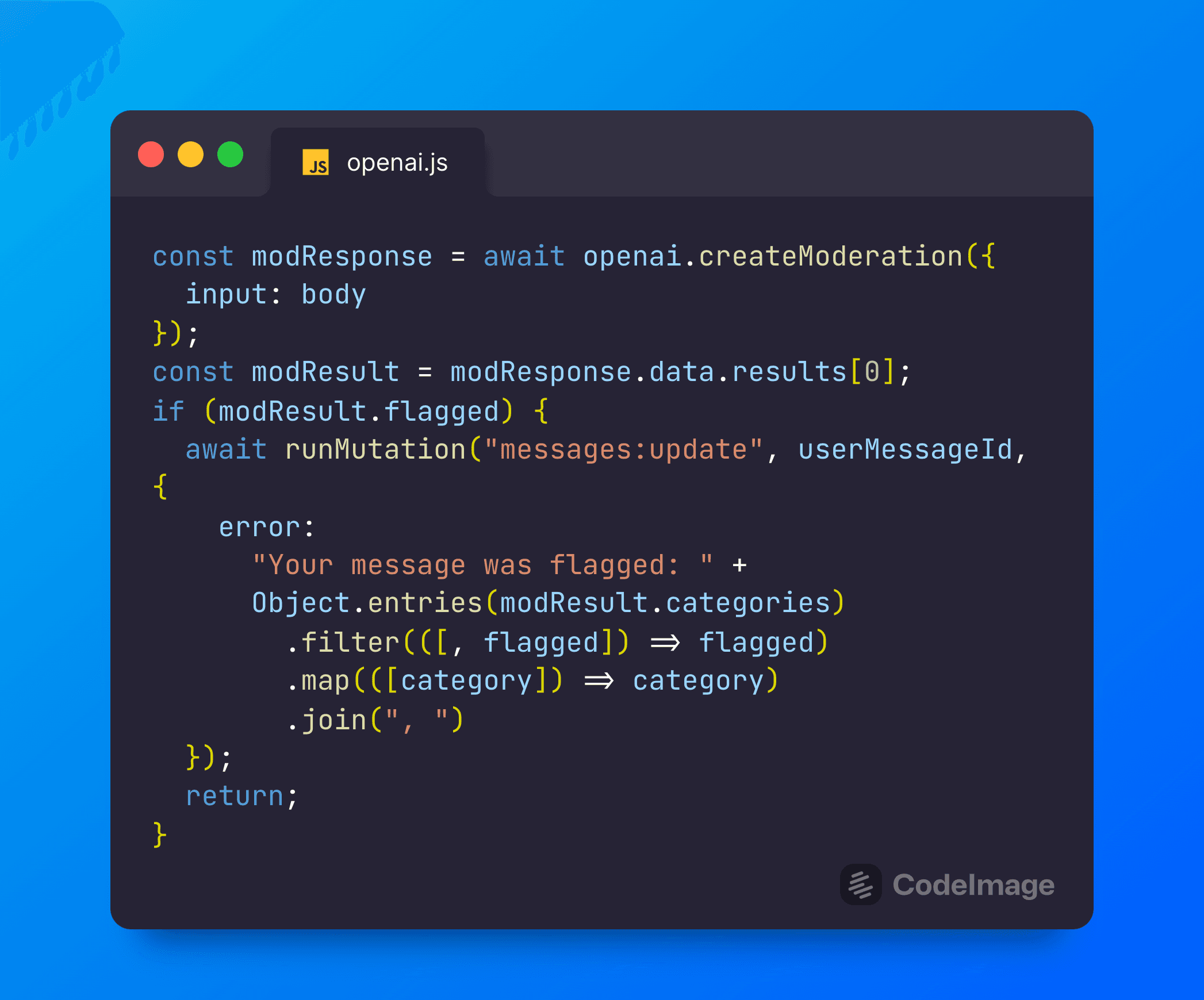
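For orientation, here is a minimal sketch of what a single moderation result looks like and how you might read it. The sample object is hand-written with made-up scores and only a few categories, so treat it as an illustration rather than real API output. `topCategory` is a hypothetical helper, not part of the OpenAI SDK.

```javascript
// Hand-written sample shaped like one entry of `results` from the
// moderation endpoint. Scores are made up; real responses list more categories.
const sampleResult = {
  flagged: true,
  categories: { hate: false, violence: true, "self-harm": false },
  category_scores: { hate: 0.01, violence: 0.93, "self-harm": 0.002 },
};

// Hypothetical helper: return the category with the highest score.
function topCategory(result) {
  const entries = Object.entries(result.category_scores);
  entries.sort(([, a], [, b]) => b - a);
  const [name, score] = entries[0];
  return { name, score };
}

console.log(sampleResult.flagged); // true
console.log(topCategory(sampleResult)); // { name: 'violence', score: 0.93 }
```

The post only relies on `flagged` and `categories`. The scores are there if you ever want a threshold of your own.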

Updating the user’s message if the message was flagged:

if (modResult.flagged) {
  await runMutation(internal.messages.update, {
    messageId: userMsgId,
    patch: {
      error:
        "Your message was flagged: " +
        Object.entries(modResult.categories)
          .filter(([, flagged]) => flagged)
          .map(([category]) => category)
          .join(", "),
    },
  });
  return;
}

As a reminder, this calls a mutation in convex/messages.js that patches the message, adding an error field:

export const update = internalMutation(async ({ db }, { messageId, patch }) => {
  await db.patch(messageId, patch);
});
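If you want to test the error string without calling OpenAI, the expression used in the flagged branch above can be pulled into a small pure function. This is only a sketch: `flaggedMessage` is a hypothetical name, and the input is made up.

```javascript
// Hypothetical helper: build the error string from a moderation result's
// `categories` map (category name -> boolean).
function flaggedMessage(categories) {
  const names = Object.entries(categories)
    .filter(([, flagged]) => flagged)
    .map(([category]) => category);
  return "Your message was flagged: " + names.join(", ");
}

// Made-up input for illustration.
const message = flaggedMessage({ hate: true, sexual: false, violence: true });
console.log(message); // Your message was flagged: hate, violence
```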

Why not check beforehand?

It’s a fair point that we could run moderation before inserting the message into the database in the first place. In my case, I wanted to show the message immediately and commit the intent to send it. If I waited for moderation, it would take an extra 500ms+ before the message was added and appeared in the UI.

For moderating identity creation (see this post on adding identities), I moderate first for simplicity: I return an error when the user tries to create an identity that gets flagged. If that felt too slow, I could show a spinner while the identity is being created. One nice thing about Convex mutations and actions is that you can await them in the UI to know when they succeed or fail, even if you don’t care about their return value. For instance:

setLoading(true);
setError(null);
const errorMsg = await addIdentity(
  newIdentityName,
  newIdentityInstructions
);
if (errorMsg) setError(errorMsg);
setLoading(false);
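To make the moderate-first flow concrete, here is a rough sketch of both halves: a server-side function that returns an error string instead of saving when the input is flagged, and a UI handler that awaits it. Everything here is an assumption for illustration: `addIdentitySketch`, `submitIdentity`, and the injected `moderate` and `save` functions are hypothetical stand-ins, not the code from the identities post. The `try`/`finally` is an optional variation that clears the loading state even if the call throws.

```javascript
// Sketch of a moderate-first flow. `moderate` and `save` are hypothetical
// stand-ins for the OpenAI call and the database write.
async function addIdentitySketch(name, instructions, { moderate, save }) {
  const result = await moderate(name + "\n" + instructions);
  if (result.flagged) {
    // Nothing is stored; the caller gets a message to display.
    return "Your identity was flagged.";
  }
  await save({ name, instructions });
  return null; // null means success
}

// Hypothetical UI handler: always clears the loading flag, even on a throw.
async function submitIdentity(name, instructions, deps, ui) {
  ui.setLoading(true);
  ui.setError(null);
  try {
    const errorMsg = await addIdentitySketch(name, instructions, deps);
    if (errorMsg) ui.setError(errorMsg);
  } finally {
    ui.setLoading(false);
  }
}

// In-memory stubs (a real app would call OpenAI and Convex here).
const saved = [];
const deps = {
  moderate: async (text) => ({ flagged: text.includes("badword") }),
  save: async (identity) => {
    saved.push(identity);
  },
};
```

The trade-off is the one described above: the user waits for moderation before seeing anything, but flagged input never reaches the database.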

Refreshing the client’s copy of messages with the new field:

This happens automatically! One of the cool things about Convex is that queries are reactive by default. Here’s how it works:

- We originally fetched a list of 100 recent messages in the UI with a query called `list` in convex/messages.js, which runs in Convex’s cloud. This result is cached automatically, thanks to guarantees provided by Convex’s deterministic runtime.
- When we sent a new message, we inserted a message into the “messages” table.
- Because the `list` query was for the most recent 100 messages, the results were automatically invalidated and re-executed. The new results get pushed to clients over a WebSocket, triggering a React render for the component(s) that called `useQuery(api.messages.list)` with the new messages.
- The same thing happens when we update a message to add an `error` field. As if by magic, the message with the `error` field shows up in the UI and we can render a different UI.
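If it helps to picture the steps above, here is a toy model of the observable behavior: a write re-runs subscribed queries and pushes fresh results to listeners. This is not how Convex is implemented, and every name in it (`ToyStore`, `subscribe`, `write`) is made up for the sketch.

```javascript
// Toy model of a reactive query: NOT how Convex works internally, just the
// behavior a client observes. All names here are made up for illustration.
class ToyStore {
  constructor() {
    this.messages = [];
    this.subscribers = [];
  }
  // Register a query function and a callback; run the query once right away.
  subscribe(query, onResult) {
    this.subscribers.push({ query, onResult });
    onResult(query(this.messages));
  }
  // Every write re-runs each subscribed query and pushes the new result.
  write(mutate) {
    mutate(this.messages);
    for (const { query, onResult } of this.subscribers) {
      onResult(query(this.messages));
    }
  }
}

const store = new ToyStore();
const renders = [];
// Like `list`: the most recent 100 messages (copied so each result is a snapshot).
store.subscribe(
  (msgs) => msgs.slice(-100).map((m) => ({ ...m })),
  (result) => renders.push(result)
);
store.write((msgs) => msgs.push({ id: 1, body: "hi" }));
store.write((msgs) => {
  msgs[0].error = "Your message was flagged: hate";
});
console.log(renders.length); // 3
console.log(renders[2][0].error); // Your message was flagged: hate
```

The toy re-runs every query on every write. The point is only that the UI never has to ask for the update: the patched message arrives the same way a new message does.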

Rendering flagged messages in the UI:

To avoid showing flagged messages, we can do some standard React gating:

<span style={{ whiteSpace: "pre-wrap" }}>
  {message.error ? "⚠️ " + message.error : message.body ?? "..."}
</span>

Here we show the error with ⚠️ prepended if there is one, otherwise the body if there is one, otherwise “…” for messages we haven’t updated with a bot’s response.
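The same precedence can be checked outside of React by moving the expression into a plain function. `displayText` is a hypothetical name used only for this sketch, and the message objects are made up.

```javascript
// Hypothetical helper mirroring the JSX expression above.
function displayText(message) {
  return message.error ? "⚠️ " + message.error : message.body ?? "...";
}

// Flagged user message: the error wins even though a body exists.
console.log(displayText({ body: "something rude", error: "Your message was flagged: hate" }));
// ⚠️ Your message was flagged: hate

// Normal message: the body is shown.
console.log(displayText({ body: "Hello!" })); // Hello!

// Bot message still waiting for a response: placeholder.
console.log(displayText({})); // ...
```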

Filtering flagged messages out of future bot input

We avoided sending the message to ChatGPT when we initially detected bad input. Still, when we send our next set of messages to the API, we don’t want to include the bad input in historical messages either. To do that, we can adjust our filter in messages:send:

const messages = await db
  .query("messages")
  .filter((q) => q.eq(q.field("error"), undefined))
  .filter((q) => q.neq(q.field("body"), undefined))
  .take(21); // 10 pairs of prompt/response and our most recent message.

We skip any messages with an “error” field and any messages without a body, for instance, bot messages that were never updated (such as when we bailed due to flagged input).
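To see which rows survive those two conditions, here is the same logic applied to a plain array. The rows are made up, and this is an in-memory illustration of the filter, not the Convex query itself.

```javascript
// Made-up rows mimicking the "messages" table.
const rows = [
  { id: 1, body: "Hello" },
  { id: 2, body: "Hi there!" },
  { id: 3, body: "something offensive", error: "Your message was flagged: hate" },
  { id: 4 }, // bot placeholder that never got a body
  { id: 5, body: "How are you?" },
];

// Same two conditions as the query, expressed as array filters.
const history = rows
  .filter((m) => m.error === undefined)
  .filter((m) => m.body !== undefined)
  .slice(0, 21);

console.log(history.map((m) => m.id)); // [ 1, 2, 5 ]
```

Both the flagged message (3) and the bot placeholder that was abandoned because of it (4) drop out, so neither reaches ChatGPT as history.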

Summary

In this post, we looked at how to moderate the content you send to ChatGPT, from the UI to the API calls. I hope it was helpful. Let us know in Discord what you think!
